UPDATE: for those visiting here via links, see my recent letter regarding Dr. Richard Muller and BEST.

I have some quiet time this Sunday morning in my hotel room after a hectic week on the road, so it seems like a good time and place to bring up statistician William Briggs’ recent essay and to add some thoughts of my own. Briggs has taken a look at what he thinks will be going on with the Berkeley Earth Surface Temperature project (BEST). He points out the work of David Brillinger, whom I met with for about an hour during my visit. Briggs isn’t far off.

Brillinger, another affable Canadian from Toronto, with an office covered in posters to remind him of his roots, has not even a hint of the arrogance and advance certainty that we’ve seen from people like Dr. Kevin Trenberth. He’s much more like Steve McIntyre in his demeanor and approach. In fact, the entire team seems dedicated to providing an open source, fully transparent, and replicable method no matter whether their new metric shows a trend of warming, cooling, or no trend at all, which is how it should be. I’ve seen some of the methodology, and I’m pleased to say that their design handles many of the issues skeptics have raised and has done so in ways that are unique to the problem.

Mind you, these scientists at LBNL (Lawrence Berkeley National Labs) are used to working with huge particle accelerator datasets to find minute signals in the midst of seas of noise. Another person on the team, Dr. Robert Jacobsen, is an expert in analysis of large data sets. His expertise in managing reams of noisy data is being applied to the problem of the very noisy and very sporadic station data. The approaches that I’ve seen during my visit give me far more confidence than the “homogenization solves all” claims from NOAA and NASA GISS, and more confidence that the BEST result will be closer to the ground truth than anything we’ve seen.

But as the famous saying goes, “there’s more than one way to skin a cat”. Different methods yield different results. In science, sometimes methods are tried, published, and then discarded when superior methods become known and accepted. I think, based on what I’ve seen, that BEST has a superior method. Of course that is just my opinion, with all of its baggage; it remains to be seen how the rest of the scientific community will react when they publish.

In the meantime, never mind the yipping from climate chihuahuas like Joe Romm over at Climate Progress who are trying to destroy the credibility of the project before it even produces a result (hmmm, where have we seen that before?). It is simply the modus operandi of the fearful, who don’t want anything to compete with the “certainty” of climate change they have been pushing courtesy of NOAA and GISS results.

One thing Romm won’t tell you, but I will, is that one of the team members is a serious AGW proponent, one who wields some very great influence because his work has been seen by millions. Yet many people don’t know of him, so I’ll introduce him by his work.

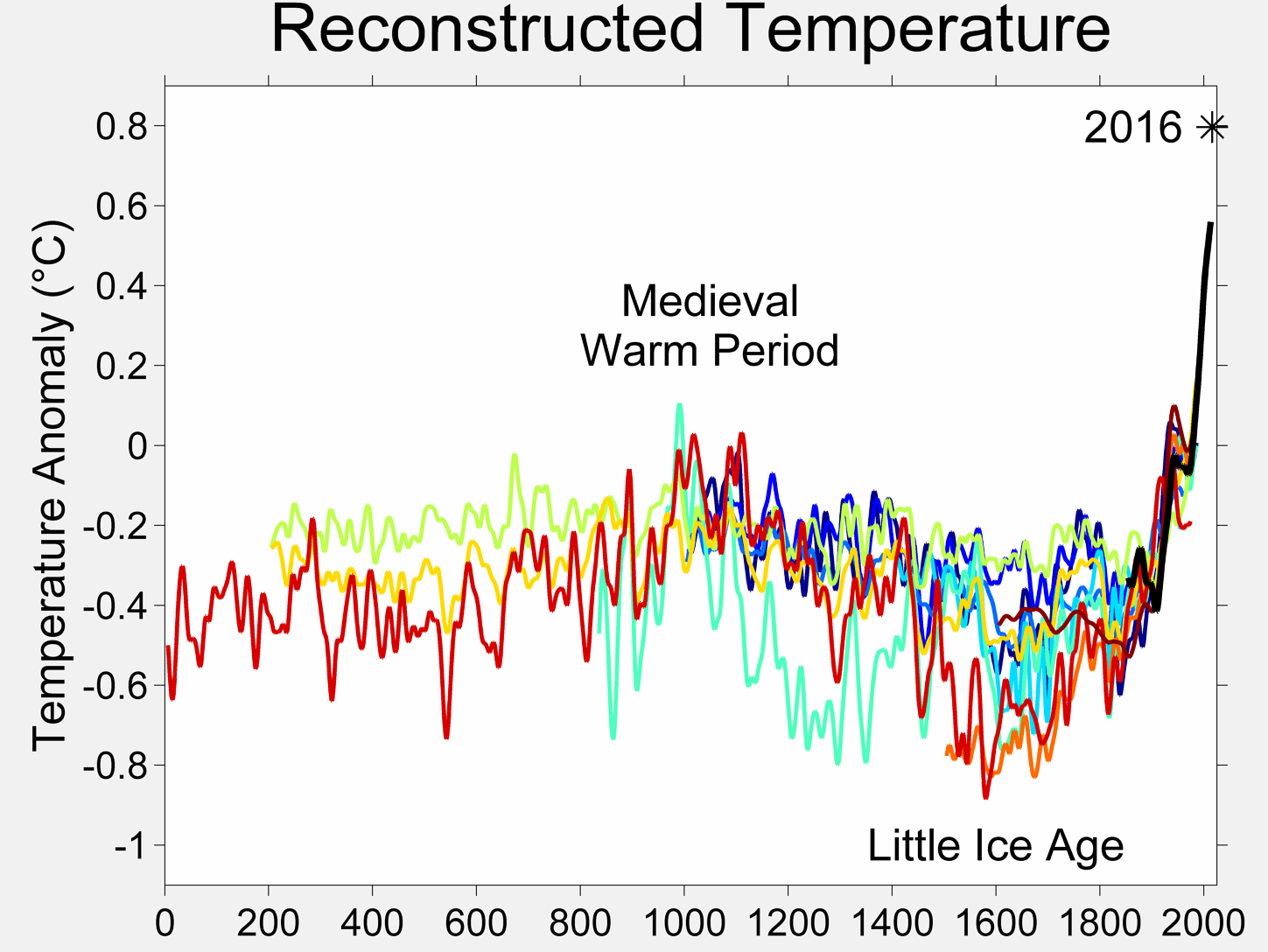

We’ve all seen this:

It’s one of the many works of global warming art that pervade Wikipedia. In the description page for this graph we have this:

The original version of this figure was prepared by Robert A. Rohde from publicly available data, and is incorporated into the Global Warming Art project.

And who is the lead scientist for BEST? One and the same. Now contrast Rohde with Dr. Muller who has gone on record as saying that he disagrees with some of the methods seen in previous science related to the issue. We have what some would call a “warmist” and a “skeptic” both leading a project. When has that ever happened in Climate Science?

Other than making a lot of graphical art that represents the data at hand, Rohde hasn’t been very outspoken, which is why few people have heard of him. I met with him and I can say that Mann, Hansen, Jones, or Trenberth he isn’t. What struck me most about Rohde, besides his quiet demeanor, was the fact that it was he who came up with a method to deal with one of the greatest problems in the surface temperature record that skeptics have been discussing. His method, which I’ve been given in confidence and agreed not to discuss, gave me one of those “Gee whiz, why didn’t I think of that?” moments. So, the fact that he was willing to look at the problem fresh, and come up with a solution that speaks to skeptical concerns, gives me greater confidence that he isn’t just another Hansen and Jones re-run.

But here’s the thing: I have no certainty nor expectations in the results. Like them, I have no idea whether it will show more warming, about the same, no change, or cooling in the land surface temperature record they are analyzing. Neither do they, as they have not run the full data set, only small test runs on certain areas to evaluate the code. However, I can say that having examined the method, on the surface it seems to be a novel approach that handles many of the issues that have been raised.

As a reflection of my increased confidence, I have provided them with my surfacestations.org dataset to allow them to use it to run comparisons against their data. The only caveat being that they won’t release my data publicly until our upcoming paper and the supplemental info (SI) has been published. Unlike NCDC and Menne et al, they respect my right to first publication of my own data and have agreed.

And, I’m prepared to accept whatever result they produce, even if it proves my premise wrong. I’m taking this bold step because the method has promise. So let’s not pay attention to the little yippers who want to tear it down before they even see the results. I haven’t seen the global result, nobody has, not even the home team, but the method isn’t the madness that we’ve seen from NOAA, NCDC, GISS, and CRU, and, there aren’t any monetary strings attached to the result that I can tell. If the project was terminated tomorrow, nobody loses jobs, no large government programs get shut down, and no dependent programs crash either. That lack of strings attached to funding, plus the broad mix of people involved, especially those who have previous experience in handling large data sets, gives me greater confidence in the result being closer to a bona fide ground truth than anything we’ve seen yet. Dr. Fred Singer also gives a tentative endorsement of the methods.

My gut feeling? The possibility that we may get the elusive “grand unified temperature” for the planet is higher than ever before. Let’s give it a chance.

I’ve already said way too much, but it was finally a moment of peace where I could put my thoughts about BEST to print. Climate related website owners, I give you carte blanche to repost this.

I’ll let William Briggs have a say now, excerpts from his article:

=============================================================

Word is going round that Richard Muller is leading a group of physicists, statisticians, and climatologists to re-estimate the yearly global average temperature, from which we can say such things like this year was warmer than last but not warmer than three years ago. Muller’s project is a good idea, and his named team are certainly up to it.

The statistician on Muller’s team is David Brillinger, an expert in time series, which is just the right genre to attack the global-temperature-average problem. Dr Brillinger certainly knows what I am about to show, but many of the climatologists who have used statistics before do not. It is for their benefit that I present this brief primer on how not to display the eventual estimate. I only want to make one major point here: that the common statistical methods produce estimates that are too certain.

…

We are much more certain of where the parameter lies: the peak is in about the same spot, but the variability is much smaller. Obviously, if we were to continue increasing the number of stations the uncertainty in the parameter would disappear. That is, we would have a picture which looked like a spike over the true value (here 0.3). We could then confidently announce to the world that we know the parameter which estimates global average temperature with near certainty.

Are we done? Not hardly.
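As an editorial illustration of the excerpt above (a sketch, not code from Briggs’ article): under the textbook assumption of independent station readings that share a common spread `sigma`, the standard error of the estimated mean parameter shrinks like `sigma / sqrt(n)`, which is why the curve narrows toward a spike as stations are added. The spread of a single new observation, by contrast, never falls below `sigma`. The function names and the value of `sigma` are assumptions chosen for the example; only the true value of 0.3 comes from the excerpt.

```python
import math

TRUE_VALUE = 0.3  # the parameter value used in the excerpt


def parameter_standard_error(sigma, n_stations):
    """Standard error of the sample mean for n independent readings."""
    return sigma / math.sqrt(n_stations)


def predictive_standard_deviation(sigma, n_stations):
    """Spread of one NEW observation: noise plus uncertainty in the mean."""
    return math.sqrt(sigma ** 2 + sigma ** 2 / n_stations)


if __name__ == "__main__":
    sigma = 1.0  # assumed station-to-station spread, illustrative only
    for n in (10, 100, 1000, 10000):
        se = parameter_standard_error(sigma, n)
        pred = predictive_standard_deviation(sigma, n)
        print(f"n={n:6d}  parameter SE={se:.4f}  predictive SD={pred:.4f}")
```

Running it shows the parameter uncertainty collapsing while the predictive spread levels off near `sigma`, one common way in which a narrow parameter estimate can overstate how certain we are about the quantity itself.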

Read the full article here

Anthony, this sounds positive. Let’s hope for the best and wait for the results.

Anthony, thanks for the info about the BEST project. I have been waiting for an update from you on it. One thing for sure is that having so many different disciplines of scientists, it will bring about a result, good or bad, that will be ROBUST in every respect. Joe Romm and the team will no doubt have a field day with anything that is produced by the BEST team, a good name for them and it will piss off Romm and company (who cares). Looking forward to more on the project.

When I was searching for a signal in noisy data, I knew that I was causing it. The system was given a rapidly firing regular signal at particular frequencies. By mathematically removing random brain noise, I did indeed find the signal as it coursed through the auditory pathway and it carried with it the signature of that particular frequency. The input was artificial, and I knew what it would look like. It was not like finding a needle in a haystack, it was more like finding a neon-bright pebble I put in a haystack.
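The kind of recovery described in the paragraph above can be sketched with simple trial averaging. This is a minimal illustration, assuming a known sinusoidal stimulus and independent Gaussian noise on every trial; the function names, trial counts, and noise level are all hypothetical and are not the commenter’s actual procedure.

```python
import math
import random


def averaged_response(n_trials, n_samples=200, cycles=5, noise_sd=2.0, seed=0):
    """Average n_trials noisy recordings of the same known sinusoid."""
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    signal = [math.sin(2 * math.pi * cycles * i / n_samples)
              for i in range(n_samples)]
    sums = [0.0] * n_samples
    for _ in range(n_trials):
        for i, s in enumerate(signal):
            # Each trial is the true signal plus fresh random noise.
            sums[i] += s + rng.gauss(0.0, noise_sd)
    average = [total / n_trials for total in sums]
    return signal, average


def rms_error(a, b):
    """Root-mean-square difference between two equal-length sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


if __name__ == "__main__":
    for trials in (1, 25, 400):
        signal, average = averaged_response(trials)
        print(trials, round(rms_error(signal, average), 3))
```

Averaging works here only because the input is repeated and known in advance, so the noise cancels while the signal does not. That is the contrast the comment goes on to draw with weather data, where no such controlled, repeatable input exists.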

Warming and cooling signals in weather noise is not so easy to determine as to the cause. Does the climate hold onto natural warming events and dissipate it slowly? Does it do this in spurts or drips? Or is the warming caused by some artificial additive? Or both? It is like seed plots allowed to just seed themselves from whatever seed or weed blows onto the plot from nearby fields. If you get a nice crop, you will not be able to say much about it. If you get a poor crop, again, you won’t have much of a conclusion piece to your paper. And forget about statistics. You might indeed find some kind of a signal in noise, but I dare you to speak of it.

This is my issue with pronouncements of warming or cooling trends. Without fully understanding the weather pattern variation input system, we still have no insight into the theoretical cause of trends, be they natural or anthropogenic. We have only correlations, and those aren’t very good.

So just because someone is cleaning up the process, doesn’t mean that they can make pronouncements as to the cause of the trend they find. What goes in is weather temperature. The weather inputs may be various mixes of natural and anthropogenic signals and there is no way to comb it all out via the temperature data alone before putting it through the “machine”.

In essence, weather temperature is, by its nature, a mixed bag of garbage in. And you will surely get a mixed bag of garbage out.

Anthony Watts said “……until our upcoming paper and the supplemental info (SI) has been published. ”

When ? Where?

REPLY: It is in late stage peer review, I’ll announce more when I get the word from the journal. I suspect there will be another round of comments we have to deal with, but we may get lucky. Bear in mind all the trouble Jeff Condon and Ryan O’Donnell had with hostile reviewers getting their work out. – Anthony

I hope they collect sunshine hours. Global brightening/dimming is certainly capable enough of changing the temperature.

People attack what they fear.

So very happy to see that WUWT (the Website) is not resting on its laurels and is moving out with great dispatch as ever. The BEST project sounds very promising indeed. Your endorsement counts mucho. Take care of yourself Big Guy; don’t push your own envelope too hard, or too far, too fast.

I am greatly intrigued by this data. It has been frustrating that there has not been a good standard yet for combining the large data in a consistent manner. Curiosity is building.

Hope you enjoyed your trip Anthony.

The layout of the post seems to have got mangled though:

The fifth paragraph below the wikipedia graph ends in mid-sentence.

and

Briggs’ first graph and the three paragraphs above it are missing.

If done right this is the best development in a long time.

Here’s hoping they can find data sources uncontaminated by Hansen and friends…

Since many tried to collate existing records and came up with something similar to HadCRUT, the point is more which stations to select than how to make an average of them. I would prefer one thousand rural stations over 3,000 of whatever quality.

I still think the SST satellite record like the Reynolds OI.v2 is pretty good for the modern era, just too short.

I am truly thankful that someone other than me does all this number crunching. Though I have no doubts I could, in time, wade through it, it is not my cup of tea. The big question for me, coming from an instrument background, is whether or not the original data is a valid measure of temperature for that location.

I too seem to have become persona non grata over at Climate Progress (is it funded by Colonel Gaddafi I wonder, for all its diatribe about being nonpartisan it certainly acts in a partisan manner?) I only asked if they could stick to the science and try to leave the playground banter to the more juvenile blogs. I know, how naive of me! Apparently they think that blocking someone is the same as making them disappear, how wrong they are.

Ah, my proofreading skills are terrible, I see the “excerpts” and “…” now =)

I’ll eat my hat if the BEST result isn’t essentially in agreement with NOAA and GISS.

There’s too much at stake for too many scientists to back-pedal at this point in time. This is an establishment project headed by an AGW proponent at one of the most famously leftwing universities in the world. It’s like having cops check the work of other cops – they all stick together through thick and thin – brotherhoods are like that.

Plus the data isn’t that damn hard to analyse. It is almost certainly warmer now than it was in 1880. Reanalysis won’t change much of anything. If it did it already would have been done. So between a basic distrust of any team of establishment scientists rocking the boat and a basic belief that it’s about 0.7C warmer now than 130 years ago there’s hardly a chance that anything will change.

Moreover, those who don’t believe it’s warmer now will still not believe it’s warmer now and those who do believe will continue to believe. Contrary to popular belief in skeptic blogs there are a few basic things that stand out as credible

– it’s warmer now by a half degree or so than it was 130 years ago

– CO2 is a greenhouse gas that has the physical potential to raise surface temperatures

-anthropogenic activities have raised atmospheric CO2 from 280ppm to 380ppm

Most everything else is arguable except for the beneficial effects of a warmer planet and higher CO2 on the primary producers (green plants) in the food chain.

“And, I’m prepared to accept whatever result they produce, even if it proves my premise wrong. I’m taking this bold step because the method has promise. So let’s not pay attention to the little yippers who want to tear it down before they even see the results. I haven’t seen the result, nobody has, not even the home team, but the method isn’t the madness that we’ve seen from NOAA, NCDC, GISS, and CRU, and, …”

… and it still has not been tested, vetted, examined, and otherwise validated by the world.

It is premature for you to assign unyielding faith to this method, whatever it is. Do not be lulled into investing yourself in this process, and its result. Your bold step should not be made on promise, but on delivery.

Perhaps there is an Anthony Watts out there who will point out the deficiencies of the new method, once that method is made known. If so, it would be best if that Anthony Watts were you …

OK, sounds great. But what about the ARGO ocean buoy data, which is not often mentioned?

Also, there would be a simple way to get data from any point on the land by use of modified ARGO devices and circuitry, presumably with solar power panels. They could be placed anywhere in remote locations, thus obviating the urban heat island effects. And, the transmission of data to satellites would be automatic, so there would be no observer problems.

Good stuff, and very much what I prefer to read about: attempts at actual science and less about the bickering.

Will we get answers? Probably not, but a reduction in the wrong questions might help us grope a few steps closer to the exits in the darkened room of climate science.

Any predictions on BEST results? I’ve just created a post dealing with predictions:

http://climatequotes.com/2011/03/05/prediction-on-best-temperature/

I guess that the warming will be less than the other three, but still substantial.

If it’s really science, then let the chips fall where they may. However, I distrust temperature analysis as a means of determining whether the Earth as a whole is heating, cooling, or remaining the same. High temperatures can often be more an indicator of heat shedding mechanisms than of global warming. It’s the net energy flux that counts.

The challenge we face is measuring a very tiny putative drift in a widely-ranging signal with a high degree of chaos, superimposed on an ice-age rebound trend along with multidecadal swings and random volcanic spurts of largely unknown effect. Nor will the presence of this drift, if established with any statistical certainty, prove the AGW case. Correlation is not causation, and no amount of hand-waving will make it such.

In other words, it’s the Watts.

“in the land surface temperature record they are analyzing”

Is BEST just land surface based? There has been considerable concern expressed regarding the handling of the SST/Ocean data, does BEST cover this very, very important area?

REPLY: Land first, sea later. – Anthony

REPLY: Land first, sea later. – Anthony

Thanks looking forward to it all.

I have to agree with Brad above, if they’re using data filtered from Hansen et al. their results will be questionable; however, I’m getting the impression from Anthony that he’s pretty sure of their method so I’m hoping he also covered this concern when he talked to his source. I’m looking forward to seeing what the BEST team come up with, but I’m not going to accept anything coming out of academia without a thorough review of their methods and sources. I just don’t trust them anymore.

I’m intrigued by the promise that you see in BEST and look forwards to seeing what they generate.

I’ll let the Science speak for itself as usual

If you want a number that tells you the air temperature of a location with the noise smoothed out just measure the ground temperature at some appropriate depth, but if you want to know the global SURFACE temperature you better take into account the vast pool of polar water in the deep ocean. Temperature of South African mine at 4000 feet = approx 65 C; temperature of vast areas of the ocean at 4000 feet = approx 4 C. How did that happen?

Quote from: http://welldrillingschool.com/courses/pdf/geothermal.pdf

“Low grade geothermal energy [in contrast to high temperature geothermal energy of hot springs] is the heat within the earth’s crust. This heat is actually stored solar energy.” This interesting document also points out that in 1838 a careful study by the Royal Observatory of Edinburgh “showed that temperature variation at a 25 foot depth to be about 1/20 of the surface variation.” It would seem that the deeper you go the closer you come to the average annual temperature.
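The damping with depth quoted above is what the standard one-dimensional heat conduction solution for a periodic surface temperature predicts: the amplitude of the annual cycle decays as `exp(-z / d)`, where `d = sqrt(alpha * P / pi)` is the damping depth for thermal diffusivity `alpha` and period `P`. The sketch below is an illustration under assumptions, not part of the cited document. The diffusivity values are generic soil and rock figures assumed for the example, and the function names are hypothetical.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600
FEET_TO_METRES = 0.3048


def damping_depth(diffusivity_m2_s, period_s=SECONDS_PER_YEAR):
    """Depth at which a periodic surface wave is reduced by a factor of e."""
    return math.sqrt(diffusivity_m2_s * period_s / math.pi)


def amplitude_ratio(depth_m, diffusivity_m2_s, period_s=SECONDS_PER_YEAR):
    """Fraction of the surface temperature swing remaining at depth_m."""
    return math.exp(-depth_m / damping_depth(diffusivity_m2_s, period_s))


if __name__ == "__main__":
    depth = 25 * FEET_TO_METRES  # the 25 foot depth from the quoted study
    for alpha in (5e-7, 7e-7, 1e-6):  # assumed diffusivities in m^2/s
        ratio = amplitude_ratio(depth, alpha)
        print(f"alpha={alpha:.1e}  ratio at 25 ft = {ratio:.3f}")
```

For diffusivities in this assumed range the remaining fraction at 25 feet comes out at a few percent, the same order of magnitude as the 1/20 figure in the quotation.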

It does look very promising. But is there a possibility that the surface temperature data will turn out to be so poor that it is impossible to draw any robust conclusions from it? If so, how likely is that to be the case?

David Brillinger is an expert in time series. It would be very interesting to see what the BEST project will do to appraise the issues surrounding compliance with the Nyquist-Shannon Sampling Theorem. In both space and time.

I have mentioned this on quite a number of occasions here on WUWT. It is an essential point with regard to interpreting the shape of the signal from sampled data. Have a look at “aliasing” to see what can happen to the interpretation of sampled data when the sampling rate is poorly selected.

I should repeat – it is not the same issue as statistical sampling and convergence to underlying properties. But on that topic, it would also be interesting to see where BEST stands on the issue of stationarity.
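For readers unfamiliar with aliasing, here is a minimal sketch of the effect in the time domain (the same reasoning applies to spatial sampling). It assumes a pure cosine and uniform sampling; the function names and the example frequencies are illustrative choices, not anything taken from the BEST project.

```python
import math


def alias_frequency(f_signal, f_sample):
    """Apparent frequency of a sinusoid at f_signal when sampled at f_sample."""
    return abs(f_signal - round(f_signal / f_sample) * f_sample)


def sample_cosine(freq, f_sample, n_points):
    """Uniform samples of cos(2*pi*freq*t) taken at rate f_sample."""
    return [math.cos(2 * math.pi * freq * k / f_sample)
            for k in range(n_points)]


if __name__ == "__main__":
    f_sample = 1.0  # one sample per unit time
    fast = 0.9      # above the Nyquist limit of f_sample / 2
    slow = alias_frequency(fast, f_sample)
    fast_samples = sample_cosine(fast, f_sample, 20)
    slow_samples = sample_cosine(slow, f_sample, 20)
    worst = max(abs(a - b) for a, b in zip(fast_samples, slow_samples))
    print("alias frequency:", slow)
    print("largest difference between the two sample sets:", worst)
```

The two sets of samples agree to within rounding error, so once the data have been sampled there is no way to tell the fast oscillation from the slow one. That is why the sampling rate has to be judged against the fastest variation actually present in the signal.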

His method, which I’ve been given in confidence, and agreed not to discuss

is somewhat disturbing. Smacks a la Corbin or worse. One may hope that the method would eventually be disclosed, otherwise it may be time for a FOIA.

REPLY: Leif, that’s really out of line, and I’m disappointed that you would go there with such a heavy-handed comment.

Have you never discussed some idea, method or process with a colleague in confidence, holding that confidence until they had a chance to publish their work in paper? As indicated in the article, there will be full disclosure once the project is ready. I have no reason to believe otherwise. Dr. Muller knows that I and many others will publicly eviscerate him should those promises of full disclosure and replicability not be kept.

– Anthony

I must say I second Pamela Gray.

The climate will keep on changing.

Our problem is we do not know what causes it to change and how.

It seems to me a lot of hassle with no real point.

Beesaman says:

“I too seem to have become persona non grata over at Climate Progress (is it funded by Colonel Gaddafi I wonder, for all its diatribe about being nonpartisan it certainly acts in a partisan manner?)”

I can sympathize.

Climate Progress is funded by someone actually worse than Col. Gaddafi: George Soros. That’s why CP is so heavily partisan, and why it censors out honest scientific skeptics. Soros’ ultimate goal is a totalitarian world government.

Joe Romm is just a Soros sock puppet. He’s bought and paid for, so he does exactly what George tells him to do. You will never get the true climate picture at CP, because it is a climate propaganda echo chamber populated by mutual head-nodders. If contrary views can’t be posted at a blog, any time spent there is wasted.

Are there some scientists participating in this project who have created physical hypotheses and produced empirical research which is generally accepted in that specialty as showing that the hypotheses are reasonably well-confirmed?

It will be good just to see a climate project whose methods are reproducible, whose data and meta data are available. No matter what it shows it will at least be usable research.

Pamela Gray says:

March 6, 2011 at 8:16 am

What Pamela said. Extremely well stated, Ms. Gray.

Leif Svalgaard says:

March 6, 2011 at 10:05 am

The project will be worthless if all data and metadata is not published. I hope it will be.

Isn’t it kind of ironic that that colorful hippie artwork shows that the temperature has been below normal for “98%” of the time for its complete 2 000 year time frame?

So essentially if the next 2 000 years is slightly above normal for “98%” the complete 4 000 year time frame will produce a normal average. :p

Andrew Zalotocky says:

March 6, 2011 at 9:45 am

It does look very promising. But is there a possibility that the surface temperature data will turn out to be so poor that it is impossible to draw any robust conclusions from it? If so, how likely is that to be the case?

That in itself will be an excellent conclusion.

“Global Warming Art”; I came across that before. A warmist referred to one Wikipedia graph as a source and I looked where it came from because I thought it was one of William Connolley’s artworks, but it turned out to be from “Global Warming Art”, which gave me an even creepier feeling. Wikipedia links to this website for “Global Warming Art”:

http://www.globalwarmingart.com/

It looks defunct ATM. Don’t know whether to trust these guys or this BEST thing.

REPLY: they have a web database error, just needs a rebuild. I’ll let them know – Anthony

I apologize for posting a downer, but I cannot see any good coming from the BEST project. All they are doing is reanalyzing statistical data on temperature. But there is no great disagreement on temperature increase over the last century. The disagreements are on the reliability of the data and the cause of the rise. (Of course, there is the matter of Hansen constantly decreasing the warmth of the Thirties and increasing that of the Nineties, but I doubt that they tackle that.)

Consequently, the BEST project will come up with a number that is close to Hansen’s number and once again we will hear trumpeted the claim that there is a scientific consensus on Hansen’s work. The BEST project is an attempt to revive the meme of scientific consensus.

Briggs has a link to a UK Guardian article:

http://www.guardian.co.uk/science/2011/feb/27/can-these-scientists-end-climate-change-war

where Muller commits to posting all of the data and methods online. Judith Curry is mentioned as a member of BEST also. Steve McIntyre is quoted as saying “anything that [Muller] does will be well done”.

I look forward to the results.

As I’ve been “forced” to think about “temperature” and “average temperature”…etc.,

I’ve also been forced to think of my ENGINEERING BACKGROUND and realized that these efforts are essentially MAGNIFICENT FRAUDS from the get go.

Let’s use this as an example. I take a temperature at my house. (Right now, 30 F). I take it in two hours…a front comes through, it’s 25 F. Normally that’s the “peak temperature” of the day. Is my temperature today, 25 F, or 30 F? Is that my “maximum”? What defines what temperature is “significant”.

Likewise, what do I use? Surface station records? (See http://www.surfacestations.org) Ship’s logs? (Again, WHEN was the reading taken?) Tree ring proxies (see climateaudit.org), ice core and O18/O16 ratios (dubious from the get go)?

It all comes down to what ENERGY CONTENT THERE IS, and which way THAT is trending.

But, that…like the Seven Bridges of Königsberg problem, becomes irreducible or insoluble. The solution to that, create a MEANINGLESS metric that you convince (the ignorant and the academically gullible) that you can solve.

Alas, this work does not: build houses, drill oil wells, make nuclear plants, make medical devices. (Do I sound INSANE? No, there is a SANITY to this comment. It is meant to point out this POINTLESS EFFORT, which DOES NOT HELP HUMANITY versus things that do.)

Let’s hope these folks might find something MEANINGFUL to do with their short (we are all in that realm) lives before they pass the torches on to the next generation.

Thanks for this Anthony. What you describe is what we have all hoped for. Real science, that attempts to achieve objectivity. Real science, that uses empirical measurements to calibrate. Real science, based on deductive reasoning in the spirit of Karl Popper. Real science, that informs debate not becomes the debate. As scientists or those who would call themselves such, we must at the end remain true to our philosophy for only then can we stand with pride. So to that I would add this sage advice:

“Polonius:

This above all: to thine own self be true,

And it must follow, as the night the day,

Thou canst not then be false to any man.

Farewell, my blessing season this in thee!

Laertes:

Most humbly do I take my leave, my lord.

Hamlet Act 1, scene 3, 78–82”

Anthony, as a person who above all knows the importance of investigating the actual environment of each surface station, and the difficulty (such that you had to enlist scores [hundreds?] of volunteers to help), how can you accept this BEST approach on faith? Are they investigating the actual conditions of any of their 30,000 stations? Will they know when or where a station was moved and records were lost concerning it? I have written to them about my concerns and received no answer. Please please reassure me on this point. Without metadata such as you developed in the surfacestations project, the data are meaningless.

I think it will be nice to know the answer but will it really have any effect on the politics? Some people seem to not let ‘facts’ interfere with ‘The Cause’. I don’t think it answers the real underlying question as to cause. [Just to be clear, I mean the thing(s) that effected the change(s), not ‘The Cause’.]

Stephen Richards says:

“The project will be worthless if all data and metadata is not published. I hope it will be.”

I agree. But it is only fair to allow them to withhold their data, methodologies, metadata, code, etc., until publication. It should all be archived publicly online at that point.

This is a golden opportunity to set an example of scientific transparency. If everything is publicly archived at publication, then it will be much harder for anyone else to argue that they shouldn’t have to do the same thing.

Thanks, Anthony, this is good news and sorely needed!

I’m terribly ambivalent about AGW, mostly because I’m such a critic of the methodologies employed by Jones/Mann/Briffa etc. I’ve often argued to reboot the whole process and start over, which earns me angry stares from nearly everyone in the climate science community.

The climate system is FAR more complex than the Hockey Team & their groupies (Gavin) want to admit, with many more influences (solar, galactic, physical, chemical, biological) than their models attest to. Let’s see what some clean data shows.

Mind you, these scientists at LBNL (Lawrence Berkeley National Labs) are used to working with huge particle accelerator datasets to find minute signals in the midst of seas of noise.

I commune with physicists from DOE’s Fermilab and can attest to their focus and dedication on dealing with amazingly complex data analysis, so this is a fantastic addition!

Let’s get the catastrophists away from the process entirely, and let the chips & data fall where they may. I can live with any conclusions if the science is sound.

“I’m prepared to accept whatever result they produce, even if it proves my premise wrong.”

I read that statement by Anthony as saying that he will approach the result with an open mind, not dismiss or accept it in advance.

I too will try to treat it on its merits, but I have very severe doubts that there is enough data of sufficient quality to give meaningful results over even the last ten decades (or maybe much less than that), let alone over any longer period.

I also have very severe doubts that the “land temperature”, which they are doing first, is as valuable a measure as the “sea temperature”. But then, there is even less data for that, isn’t there?

Probably the first thing I will look for in the results is their evaluation of uncertainty. If the older results are not shown with greater uncertainty, then I am going to have difficulty accepting anything.

“His method, which I’ve been given in confidence and agreed not to discuss”

I have no problem with that. It’s a work in progress. Obviously, the method will need to be fully documented with the results.

I await the results with interest but no great expectations.

1DandyTroll says:

March 6, 2011 at 11:09 am

Yo dude, there you go spreading all that logic about again!

Do you think it might catch on, especially in the “hippie artwork” world?

I still don’t see why you need a whole bunch of data points in the first place. We have the Central England Temperature, the Irish record of similar length, and probably a couple others. If you’re looking for long-term GLOBAL trends, they must show up in a consistent and well-calibrated record of two or three consistently rural places. If nothing else, simple data gives you a much better chance of pinning down the contaminating factors.

The big step forward with this project will be that the data and method will be published and available for inspection and critique. The reconstruction will be more credible than its predecessors for this reason alone. No more excuses about “data confidentiality” and losing the data.

If Global Warming causes Global Cooling, what then does Global Cooling cause? Snowball Earth?

I certainly hope their research goes far enough back to capture a full PDO cycle. My personal opinion is that any such project would need to go back to at least 1920 or so in order to capture temperatures over a full cycle and to put things into proper perspective.

Leif Svalgaard says:

March 6, 2011 at 10:05 am

His method, which I’ve been given in confidence, and agreed not to discuss

is somewhat disturbing. Smacks a la Corbin or worse. One may hope that the method would eventually be disclosed, otherwise it may be time for a FOIA.

FOIA is probably not applicable here. This effort is funded by foundations, including Charles Koch, of the Koch brothers, Bill Gates, and the Novim foundation and others.

It is a kind of inside job. Muller’s daughter, the CEO of his consulting firm, which does a lot of work for oil companies, is in charge of administration, and Rohde, who was recently his graduate student and worked at the Novim foundation, is the chief scientist on the project.

Despite that, it could end up being a legitimate effort. We will have to see what methods they use to analyse the data.

The Satellite data analysis of the troposphere, and the various surface temperature records seem to get pretty much the same results, so personally I don’t expect to see much of a difference between what they show and what has already been published.

I kind of wish Steve McIntyre was working on this project. At the least, I hope these people make all of their work available so McIntyre can check it (if he of course, wants to).

Atmospheric temperature is NOT equal to or proportional to atmospheric energy content.

It’s nice to know these are warm fuzzy statisticians – but if they are using the incorrect metric what does that prove? Apart from the fact that they are as ignorant of enthalpy as ‘climate scientists’?

Now if they were using the measure of humidity also taken at the observing stations to come up with some integral of enthalpy and thus daily energy content that might be better. But atmospheric temperature proves little and is just used because it was what was measured.

As Logan says: March 6, 2011 at 9:11 am BEST would be far better off starting with ARGO metrics – they are measuring something that is directly proportional to energy content. It is imbalance in global energy content that is the problem that needs measuring.
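To put rough numbers on the temperature-versus-energy point, here is a minimal sketch using the standard psychrometric approximation for the enthalpy of moist air. The Magnus formula for saturation vapour pressure, the sea-level pressure default, and the two example humidities are assumptions chosen for illustration; nothing here comes from the BEST project.

```python
import math


def saturation_vapor_pressure_hpa(temp_c):
    """Magnus approximation for saturation vapour pressure over water, in hPa."""
    return 6.112 * math.exp(17.62 * temp_c / (243.12 + temp_c))


def moist_air_enthalpy(temp_c, rel_humidity, pressure_hpa=1013.25):
    """Specific enthalpy of moist air in kJ per kg of dry air.

    Uses the common approximation h = 1.006*T + w*(2501 + 1.86*T),
    where w is the humidity ratio (kg of water vapour per kg of dry air).
    rel_humidity is a fraction between 0 and 1.
    """
    vapour_pressure = rel_humidity * saturation_vapor_pressure_hpa(temp_c)
    w = 0.622 * vapour_pressure / (pressure_hpa - vapour_pressure)
    return 1.006 * temp_c + w * (2501.0 + 1.86 * temp_c)


# Same thermometer reading, very different energy content (illustrative values).
dry = moist_air_enthalpy(30.0, 0.10)
humid = moist_air_enthalpy(30.0, 0.90)
print(f"30 C at 10% RH: {dry:.1f} kJ/kg")
print(f"30 C at 90% RH: {humid:.1f} kJ/kg")
```

Under these assumptions, two parcels of air at the same temperature carry very different amounts of energy once humidity is taken into account, which is the commenter's objection to temperature as the metric.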

At the end of the day, all I ask is that the science is done right, without political bias, and in a reproducible & transparent manner. That way, if tough decisions need to be made, they can be made with confidence. To date, this simply hasn’t been the case. This sounds like a significant step in the right direction & I applaud Anthony & others for supporting it.

As for Romm et al., their lack of support is further evidence they couldn’t care less about the science & are only concerned about their own politics. They are their own worst enemy because the public can easily see the case I just laid out here.

Thanks Anthony,

Any idea when we all get to see the result? The result is going public immediately rather than waiting to be peer-reviewed? I recall a previous post said it would be out by end of Feb.

Ian W says:

March 6, 2011 at 1:53 pm

No kidding. It doesn’t matter how good your statistics are, if you’re using the wrong formula to do the actual averaging you are getting garbage out.

Mark

eddieo says:

March 6, 2011 at 12:21 pm

The big step forward with this project will be that the data and method will be published and available for inspection and critique.

Like GISS and RSS?

eadler says:

March 6, 2011 at 1:15 pm

If there is a difference, it will be seen in the period preceding the satellite data. And there is a meaningful chance of this.

Even if this effort suggests the true peak surface temperature was more often found in the 1930s, it doesn’t really prove much of anything. But it could shine a cold skeptical light on the assertion that the last decade is unusually warm. This could be earth shaking.

Let’s see the ocean data- and by comparison to that, devise proxies from surface temperatures, and see what that tells us.

For sceptics to give support for this project could turn out to be grasping the poison chalice.

It could polish the tarnished reputations of the original AGW crowd.

When this reappraisal delivers a value for late 20th century warming that broadly agrees with the official ‘consensus’, then it can be said that for all their obstructive secrecy and dubious methodology, Mann, Jones et al. were right. Nobody will remember how they got their results, just that they have been independently confirmed.

Will the BEST project make any correction for the UHI effect? Or at least separate data from rural and urban stations?

Jordan @9:46am says “It would be very interesting to see what the BEST project will do to appraise the issues surrounding compliance with the Nyquist-Shannon Sampling Theorem. In both space and time.”

With many (most) distributions of data, compliance with Nyquist sampling theorem is NOT required.

For example, I can sample the power consumption of your house at random intervals of around once per hour. Even if you have many appliances that go on and off for intervals much shorter than an hour, after acquiring once per hour samples over several months, the statistics of instantaneous power usage of the samples will approach the actual statistics of true power usage. The sampled average power consumption will rather rapidly converge with the actual power consumption.

Nyquist sampling rate is only needed if I desire to estimate a point by point time history of instantaneous power usage.

This simple example should give a hint as to what is and what isn’t dependent upon high sampling frequency. I’ll leave it to others to comment upon specific distributions such as non-stationary stochastic ergodic processes.
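The household power example can be tried out numerically. The sketch below is an illustration only: the load profile (a constant base, a fridge cycle and a heater cycle), the 90-day span and the 30 to 90 minute random gaps are all made-up assumptions, chosen so that the appliances switch much faster than the sampling rate.

```python
import random


def household_load_watts(minute):
    """Hypothetical load: constant base plus two appliances that cycle on and off."""
    base = 200.0
    fridge = 150.0 if minute % 30 < 10 else 0.0    # on 10 of every 30 minutes
    heater = 1000.0 if minute % 47 < 10 else 0.0   # on 10 of every 47 minutes
    return base + fridge + heater


def true_and_sampled_mean(days=90, seed=0):
    """Compare the minute-by-minute mean with the mean of sparse random samples."""
    rng = random.Random(seed)
    total_minutes = days * 24 * 60
    true_mean = sum(household_load_watts(m) for m in range(total_minutes)) / total_minutes

    samples = []
    t = 0
    while True:
        t += rng.randint(30, 90)  # random gap of roughly one hour
        if t >= total_minutes:
            break
        samples.append(household_load_watts(t))

    sampled_mean = sum(samples) / len(samples)
    return true_mean, sampled_mean, len(samples)


true_mean, sampled_mean, n = true_and_sampled_mean()
print(f"true mean    : {true_mean:.1f} W")
print(f"sampled mean : {sampled_mean:.1f} W from {n} samples")
```

Note that this only works because the sample times are random relative to the appliance cycles. It says nothing about recovering the point-by-point time history, and it does not address sampling that is systematically tied to the signal.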

Here we go again! For the umpteenth time, it does not matter if you discover that the earth is warming (it probably is), or cooling (it probably has been for 10 years or more and will continue to do so), or the same (as what?).

Keep your eye on the pea under the thimble.

It is the REASON for change.

I think maybe you cede a step too far when saying that you will accept the results because the approach has promise. Saying that you will take the results very seriously and give them a great deal of credence would be a better posture, I think.

That the approach has promise is no guarantee either that it is correct, or that indeed adequate data yet exists in any form to come to a “certain” conclusion. (And 95% confidence, notwithstanding the incessant touting of same by the IPCC CRU-Krew, is a few sigmas short of confidence, btw.)

So regardless of the outcome, distrust and verify.

I will second Anthony’s support for the BEST project. It’s what we’ve been calling for since 2007. From my contact with them, I’ll say that they have addressed all the concerns that I was working on, and the description of their method gives me a great deal of confidence.

More details ( if allowed) after my visit.

A prediction? We will know what we know now: the world is warming. The uncertainty bars will WIDEN. Current methods underestimate the uncertainty (see Briggs). We’ve known this for a long while, but the climate science community has resisted this.

UHI: with improved metadata you have a positive chance of actually finding a UHI signal. It won’t make the warming disappear.

Quite apart from the physical issue of enthalpy vs. temperature measurements, the outstanding issue in the land-station data is the time-dependent bias introduced into the records by intensifying urbanization/mechanization. So far, there is no indication how BEST will cope with that near-ubiquitous problem. Instead, we only hear irrelevant talk of using more stations and extracting signals from noise, as if these were the main obstacles to reliable estimation. “Global” results are not likely to change materially if only such tangential issues are resolved. The clerical task of compiling averages is, after all, quite trivial. It will be interesting to see how the academic BEST team, which lacks anyone with practical field experience, handles the very-much-nontrivial bias in the data base itself.

Ian W says:

March 6, 2011 at 1:53 pm

Atmospheric temperature is NOT equal to or proportional to atmospheric energy content.

It’s nice to know these are warm fuzzy statisticians – but if they are using the incorrect metric what does that prove? Apart from the fact that they are as ignorant of enthalpy as ‘climate scientists’?

Now if they were using the measure of humidity also taken at the observing stations to come up with some integral of enthalpy and thus daily energy content that might be better. But atmospheric temperature proves little and is just used because it was what was measured.

As Logan says: March 6, 2011 at 9:11 am BEST would be far better off starting with ARGO metrics – they are measuring something that is directly proportional to energy content. It is imbalance in global energy content that is the problem that needs measuring.

Unfortunately the ocean heat is quite difficult to measure. A lot of corrections have been needed recently. Sampling the top 700M of ocean, worldwide, is not a piece of cake.

In fact if you look at the surface temperature record and ocean heat graph they have shown fairly parallel increases :

http://bobtisdale.blogspot.com/2009/10/nodc-corrections-to-ocean-heat-content.html

http://data.giss.nasa.gov/gistemp/graphs/Fig.A2.gif

Daily maximum and/or minimum temperatures are not the appropriate metric for the analysis of the global energy status, or its long or short term budget. It matters little how closely the temperature record matches reality or how much adjustment it has been subject to in order to confirm the IPCC’s remit. It remains the wrong metric.

At the following site, there are huge differences in the slopes of the graphs between GISS and Hadcrut and the satellite data from 1998 on.

http://hidethedecline.eu/pages/posts/status-on-global-temperature-trends-216.php

I would be very interested to see Frank Lansner’s graph of the new set from 1998 on when it is ready to see which of the above is closest. As well, I will be curious if 1998 is the warmest modern year or 2010.

@Juraj V. Mar 6, 2011 at 8:43 am:

Juraj, as I understand it, the BEST study will include all the stations and will take the siting of the stations into account (hopefully with a more accurate UHI adjustment than the 0.15C that Chang’s Chinese study led CRU to).

The bigger issue may be that they will publish (as I understand it) their data and methodology. If done, this will allow for sorting out the rural stations, either using the BEST methods or a method one thinks might be superior.

This study might allow us all to get past the “Great Dying Off of the Met Stations” issue. If it also gives solid data and transparent adjustments, those issues can be gotten past, too.

It is, of course, the consensus here that those three issues were never dealt with, and that dealing with them may turn out to make skepticism passé. Our bet is that BEST will show CRU to be dead wrong. Predictions like, “You’re wrong, suckah!” are what science is all about. Such antagonism is supposed to lead to better efforts, with their better results – and that is what we have all been yearning for.

Must be one hell of an idea if it makes Mr Watts go “DOH!” 🙂

” Theo Goodwin says: March 6, 2011 at 11:27 am

The BEST project is an attempt to revive the meme of scientific consensus. ”

Time will tell if they are attempting to revive scientific consensus or the scientific method. We can only hope for the latter and hold their feet to the fire if they don’t. That is the role of a skeptic. The fact that they know the likes of M&M are going to go over things with a fine tooth comb makes me give the BEST project the benefit of the doubt for now.

Charlie A wrote with high confidence:

“For example, I can sample the power consumption of your house at random intervals of around once per hour. Even if you have many appliances that go on and off for intervals much shorter than an hour, after acquiring once per hour samples over several months, the statistics of instantaneous power usage of the samples will approach the actual statistics of true power usage. The sampled average power consumption will rather rapidly converge with the actual power consumption.”

Time sampling at stations is not random. Spatial placement of stations is not random; they are placed near places where people live (and therefore expand). Weather flying over a station is not a white noise. True statistics and spectral limits of these processes are largely unknown. Total duration of random sampling must be much longer than the lowest period of weather-climate events, which is about a time between ice ages, if not much longer. In short, the simple example of sampling appliance power without formulating all necessary assumptions about statistical properties of the process and sampling gives a good example why the surface temperature numerology is so screwed.

Regarding the BEST project, the best these academics can do is to define proper measuring methodology. However, given that the sampling at one point of the boundary layer of the three-dimensional turbulent temperature field is no proxy for anything related to energy imbalance, even the finest method will produce unphysical garbage.

If you check the sources for that Reconstructed Temperature chart, at least 6 of the 10 papers were from Hockey Team members, and the eleventh curve is Hadley CRU.

I disagree with those who claim the project is a waste. One of the fundamental, legitimate complaints of the skeptics has been that both the data base from which the purported AGW signal has been statistically teased and the methods of teasing out that signal are suspect. This project promises to expose both to the light of day for all to see. Let the chips fall wherever they fall in the pursuit of truth for its own sake! Arguments for or against AGW are farts in a whirlwind without a dependable, common point of departure. This project has the potential to provide that common point. What AGW proponents and skeptics do with that common point is another matter entirely.

Observation #1 – We have statements and/or inferences from various sources that the BEST Project’s data, methodology, code and all related documents will be provided without needing to be requested. Also, we have statements and/or inferences from various sources that those will be available to everyone, not just restricted to the academic/institutional science community. We shall see if those inferences materialize. Fair enough. That is the critical go-no-go check point of an open and transparent step in the science of GST time series analysis. NOTE: I do not see any discussion of whether those will be behind a paywall or not, but given the funding it might very well be behind a paywall.

Observation #2 – If the BEST Project is to be objectively independent from the past GST science, then its data sources must be the original raw data, not processed HADCRU, NOAA, GISS nor any other processed datasets. I do not recall that this has been stated and/or inferred about the BEST project. That is another of the critical go-no-go check points of an open and transparent step in the science of GST time series analysis. It would be disappointing if the BEST project stands on the shoulders of previously processed data rather than on original raw data. Time will tell.

Observation #3 – Premises that have formed the fundamental basis of the BEST Project have not, to my knowledge, been articulated even though the project has been in development and implementation for up to 1 year already. I mean, for example, premise statements like (my words), “We are fundamentally basing our approach on X, Y and Z.” What kind of X, Y and Z would I like to see? First, I would like to see a statement of BEST Project statistical premises. Second, I would like to see a statement of their quality assurance approach, especially their internal processes for bias auditing. Third, I would like to see a statement of the bounding conditions of their study, the known and unknown limits that they assume as a starting point. Fourth, I would like to see a statement of their context to the rest of the climate science body of knowledge. Without such premise statements, we just do not have anything to base our expectations of the result of the BEST Project on. Everything stated and/or inferred so far publicly is fluffy like a cuddly warm kitten.

Observation #4 – Could this BEST Project be an “Et tu, Brute?” moment in GST climate science at these independent science blogs or a “Berlin, 9 November 1989” moment at these climate science blogs? Time will tell us which kind of moment it is, but the amount of openness with the climate blogs by the BEST project so far during its development and implementation phases does send up cautionary signals in my mind. The BEST Project has a right to its privacy during development and implementation. It also has had the right to have been free to be much more open during its development and implementation phases. They made a choice so far, and it was a choice they had a right to make. My question is: why have they chosen to restrict openness on the climate science blogs so far?

I deeply appreciate that WUWT has provided this forum to discuss climate science past, present and with expectations of the future.

John

I too have been waiting for what you find in that paper. It would make an interesting comparison with the result of Menne et al 2010 paper, which used only half of your surface stations data (without your permission) and presumably a different methodology to find that UHI effect has been properly accounted for in US temperature sets.

One can only hope Dr Matthew J Menne doesn’t turn out to be one of the peers reviewing your paper, Anthony. That would be insane.

Logan says at March 6, 2011 at 9:11 am

“OK, sounds great. But what about the ARGO ocean buoy data, which is not often mentioned?”

Just where is the Argo data?

Why is it not available every month like Dr. Spencer’s satellite data?

I keep asking, but nobody replies.

Surely somebody knows.

Where is the Argo data?

polistra says in part, on March 6, 2011 at 12:11 pm

“If you’re looking for long-term GLOBAL trends, they must show up in a consistent and well-calibrated record of two or three consistently rural places. If nothing else, simple data gives you a much better chance of pinning down the contaminating factors.”

I agree and have been trying to say just that for some time.

That’s why I have been looking at the longish record of certain Australian locations in some detail.

AusieDan – have you looked at

http://www.argo.ucsd.edu/Argo_data_and.html

(Argo data and how to get it)

or

http://www.pmel.noaa.gov/tao/elnino/drifting.html

(NOAA drifting buoy data – has a link to Argo Home Page amongst others)

I think it all comes down to attitude by those involved. That attitude is one of wishing to be absolutely certain that you are as confident as you can be in the results, and humility, because you’ve had the experience that sometimes no matter how confident you are of the results you can still get it wrong.

Now if you work for an institution looking for quarks, higgs bosons, or the “god particle” or whatever your press office have spun the latest research, you are probably used to feeling humble as the data doesn’t quite show what you thought it would, let alone what it was spun by the Press Office.

But if you are a climate “scientist” with a pretty third rate degree, in a subject where everyone else got there because there was too much competition in real science and your only “research” has been to state that temperature got warmer and predict it will get warmer in 100 years, and you don’t ever make testable predictions, and “peer review” means a buddy using a spell checker … then basically there’s no way you can ever be wrong. In climate “science” they’ve never had that humbling experience real scientists have of finding their cherished theory was a load of tripe and then learning to be a lot more careful the next time.

And more than that, it’s the reputation of the whole institution that also counts. Sometimes by sheer damned luck some scientists never get it wrong. But in a big institution like Berkeley there’s enough of them that sooner or later someone will drop an enormous clanger that affects everyone. So, they know that you can’t let rogue groups just make up the rules as they go along and so the whole institution just can’t afford to come up with a temperature series that some later work proves to be woefully biased. UEA on the other hand … reputation? What reputation?

‘A wow moment, why didn’t I think of that’

you have me chomping at the bit now Anthony. I love things like this, new ways of looking at stuff

EO

“there’s more than one way to skin a cat”.

Forgive the pedantry of a Charles Kingsley lover, but that famous ‘quote’ is

“There are more ways of killing a cat than choking it with cream”

This has the appearance of being the sort of sensible approach that we have long needed.

I look forward to reading the results of BEST. You can’t judge it before you see it.

eadler says:

March 6, 2011 at 4:33 pm

Unfortunately the ocean heat is quite difficult to measure. A lot of corrections have been needed recently. Sampling the top 700M of ocean, worldwide, is not a piece of cake.

In fact if you look at the surface temperature record and ocean heat graph they have shown fairly parallel increases :

http://bobtisdale.blogspot.com/2009/10/nodc-corrections-to-ocean-heat-content.html

http://data.giss.nasa.gov/gistemp/graphs/Fig.A2.gif

So because the right metric is difficult – continue using the wrong one?

I don’t know, choosing method before analysing data, that is very unorthodox climate research.

What if it brings wrong results? Do we have enough tricks to correct them?

/sarc off/

Very interesting, not knowing is much worse than guessing wrong.

I am excited to see the results. Perhaps we finally have some “real” science going on? Would be a breath of fresh air. I do however reserve a bit of skepticism, but will maintain my optimism until all the results are compiled and scrutinized.

Thanks for the great post Anthony!

To Dave,

This statement is false. While I would agree on your other points, this one in particular bothers me as I have seen and read so much literature that specifically contradicts this statement. I no longer believe that CO2, or any other “greenhouse” gas can do as you claim. I no longer believe there is such a thing as a “greenhouse” gas, as a gas cannot A) trap anything, or B) back-radiate (which would be required).

Can a “greenhouse” gas “slow” cooling processes? .. The jury is still out on this one, but I would suggest that it may, but the lapse rate is extremely small, perhaps beyond the point of reasonable measurement.

To boldly suggest as you do, is false. There is no evidence to support your point, either empirically or experimentally. This point also happens to be the very linchpin of the entire AGW argument, for which, again, there is absolutely no empirical evidence.

We and the world should not accept a “fait accompli” from BEST. There are issues that must be discussed up front. For example, whose historical data will be used? At the time of Climategate, the canonical version of historical data back to 1850 had been assembled by Phil Jones and blessed by all Warmista. Is that still the case? Are we taking for granted the accuracy of Phil Jones’ (non-existent) data? If we are then I have no hope that BEST can produce something useful.

I have downloaded Roy Spencer’s satellite data and graphed it against NCDC global land and ocean index.

Visually these agree very closely.

Correlation about 0.8 which means that about 64% of one, “explains” or coincides with the other, not bad for chaotic climate data.

To my mind that means the NCDC is not badly contaminated by UHI nor any of the shortcomings that we tend to concentrate upon.
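The "0.8 means 64%" arithmetic is just the square of the correlation coefficient, which equals the fraction of variance accounted for by a straight-line fit of one series on the other. A minimal sketch of that identity follows; the two series are synthetic random numbers generated for illustration, not the satellite or NCDC data.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fraction_of_variance_explained(x, y):
    """1 - SS_residual / SS_total for the least-squares line of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    intercept = my - slope * mx
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - my) ** 2 for b in y)
    return 1.0 - ss_res / ss_tot


# Synthetic series built to correlate at roughly 0.8 (assumed, illustrative only).
rng = random.Random(1)
series_a = [rng.gauss(0.0, 1.0) for _ in range(500)]
series_b = [0.8 * a + 0.6 * rng.gauss(0.0, 1.0) for a in series_a]

r = pearson_r(series_a, series_b)
print(f"r = {r:.3f}, r squared = {r * r:.3f}")
print(f"variance explained by linear fit = {fraction_of_variance_explained(series_a, series_b):.3f}")
```

The squared correlation measures how well the month-to-month wiggles line up. On its own it does not say whether the two series share the same long-term trend.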

Now the NCDC historic data keeps changing, which annoys me greatly.

Nevertheless it shows that since 1880, the temperature has risen by 0.6 degrees Celsius per 100 years.

Overlaying this strong, yet gentle linear trend, is the well known 60 year up and down cycle.

There is no visual appearance of any recent acceleration in the long term trend, that you would expect if it was being driven by the increasing human CO2 emissions.

So I would not be surprised if the Best index more or less validates the existing ones and just corrects their more shaky work, without changing the general trend.

Also I am still expecting that the next 30 years will be cooler than the late 1990’s, but that the gentle underlying trend will thereafter take the temperature back on up.

None of that speaks to the question of what is causing the rise to occur.

Several people here keep talking about measuring the heat balance rather than the temperature.

Roy Spencer has already done work on that.

While it is outside my area, it is probably where we should search for the truth.

The wild card in all this is the question of the quiet sun and its impact, if any, on the climate.

I have an open mind on that.

Interesting times.

Charlie A says: “With many (most) distributions of data, compliance with Nyquist sampling theorem is NOT required. ”

Charlie – you seem to have fallen straight into the trap of thinking that this is a problem of statistical sampling. I was trying to highlight the fact that it’s not.

(Even if it was just that, the assumption of convergence of statistical properties does not hold when a signal is aliased.)

If I am designing a digital control system, I cannot propose a control methodology based on statistical aggregates of measured variables. I don’t care what the average or standard deviation of a controlled variable is – I need to know where it is NOW in order to determine what control inputs are required to achieve a desired outcome.

And then I need to know again where it has got to within a determined interval in order to review the control inputs. I need this at an adequate frequency to be extremely confident that the sampled measured variable is not aliased.

That means it is the detailed pattern of the signal (in time, or perhaps in space) that must be determined, not its statistical properties.

That’s when we have the benefit of designing a sampled data system at the outset – the first task is to understand the dynamical properties of the controlled variable(s) to ensure the sampling scheme is adequate for the purpose.

The BEST project doesn’t have the luxury of designing a sampled data scheme at the outset to measure the PATTERN of warming in recent times. Like others, they will need to use whatever data is available to them. So the problem needs to be re-phrased.

There is still a need to determine what sampling scheme (in space and time) is adequate for the purpose of measuring a PATTERN without risk of aliasing.

The project still needs to determine the basic criteria for sampling the real environment for their purpose. It will then need to consider whether there are periods when the available data meets those criteria, and if there are periods when the criteria are not met.

If the available measurements do not meet the criteria, the measured trend will not be reliable as it may be subject to aliasing.
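For readers unfamiliar with aliasing, here is a minimal sketch of what it does to a regularly sampled signal. The numbers are assumptions picked to make the arithmetic clean: a pure once-per-day cycle read every 0.9 days, which is well below the Nyquist rate of two readings per cycle.

```python
import math


def sample_daily_cycle(interval_days, n_samples):
    """Read a pure 1-cycle-per-day cosine at a fixed sampling interval."""
    return [math.cos(2.0 * math.pi * n * interval_days) for n in range(n_samples)]


def sample_slow_wave(period_days, interval_days, n_samples):
    """Read a cosine with the given (longer) period at the same sample times."""
    return [math.cos(2.0 * math.pi * n * interval_days / period_days) for n in range(n_samples)]


# One reading every 0.9 days of a signal that repeats every 1.0 day.
undersampled = sample_daily_cycle(0.9, 40)

# A genuinely slow 9-day wave read at the very same instants.
slow = sample_slow_wave(9.0, 0.9, 40)

largest_gap = max(abs(a - b) for a, b in zip(undersampled, slow))
print(f"largest difference between the two sample sets: {largest_gap:.2e}")
```

The two sets of readings are indistinguishable, so from the samples alone a fast daily cycle looks like a slow nine-day swing. That is the sense in which an aliased record can show a pattern that is not in the underlying process.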

It is good to see that there is an expert on sampled data systems on the team (described as an expert in time series – but the same should apply to the spatial issues). I will be very interested to see how they have handled the above issues.

With regard to whether the climate is a stationary process, my point is more related to whether an adequate sampling regime at one point in time is also adequate at another.

sHx says:

March 6, 2011 at 10:41 pm

REPLY: It is in late stage peer review, I’ll announce more when I get the word from the journal. I suspect there will be another round of comments we have to deal with, but we may get lucky. Bear in mind all the trouble Jeff Condon and Ryan O’Donnell had with hostile reviewers getting their work out. – Anthony

I too have been waiting for what you find in that paper. It would make an interesting comparison with the result of Menne et al 2010 paper, which used only half of your surface stations data (without your permission) and presumably a different methodology to find that UHI effect has been properly accounted for in US temperature sets.

One can only hope Dr Matthew J Menne doesn’t turn out to be one of the peers reviewing your paper, Anthony. That would be insane.

One half of the surface stations is a pretty good sample considering the number. Using all of them probably wouldn’t change the conclusions much given the methodology that was used. This is not a judgment on the way Anthony was treated when they used his data, it is a statistical judgment.

It would make sense for Dr Menne to be a reviewer of the paper. It would improve the quality, assuming the editor is fair minded. In the case of O’Donnell, the editor ended up publishing an improved version.

If one attacks the institutions of science in the blogosphere, and then attempts to publish something, one should expect to get worked over a little. Scientists are only human. Turnabout is fair play.

Regarding station data. I am unimpressed by the history of these data plots. Once again, the simple seed plot works as a comparison. Let’s say, in our effort to develop a grand, all weather wheat seed, we scattered pots of seed round the world, paying little attention to their locations as a variable. Over time, some of our grad students left school, thus letting their seed plots go to hell in a handbasket. Others, thinking it would be more convenient to move their plot closer to the BBQ, did so, not bothering to record the move, or let us in on their plan before the move was completed. Along the way, all kinds of other things happened to these pots of seed. In the end, we had a vastly different set of variables, compared to when we started. Oh well. Let’s clean up what we have and let’s package up the product anyway, and say this is wheat that can withstand all temperatures, all altitudes, all seasons, etc. Would you buy futures in this wheat?

Wow Romm spouts a lot of nonsense in a very shrill and arrogant fashion.

Curse you Anthony for sending me back there.

I’m not holding my breath.

Roger Pielke, Sr has papers on inherent night-time low biases that occur even at relatively pristine, rural sites. Because of these biases, he’s recommended that trends be determined from high temperatures only, and simply disregard the min temps. It would be very useful if the BEST team determines additional trends from just daily high temps.

RP Sr should be included in this study (if he isn’t already). At least he should be involved in any review process.

eadler (March 6, 2011 at 4:33 pm): You provided a link to my post on the revised monthly OHC data and a link to an annual GISTEMP LOTI curve and you somehow call them similar. Have you actually scaled one and compared them? Do you know if the two curves are in fact similar, other than having positive trends?

In the meantime, never mind the yipping from climate chihuahuas like Joe Romm over at Climate Progress who are trying to destroy the credibility of the project before it even produces a result (hmmm, where have we seen that before?) , it is simply the modus operandi of the fearful, who don’t want anything to compete with the “certainty” of climate change they have been pushing courtesy NOAA and GISS results.

One has to wonder: why, exactly, are they so afraid? Their position on this is definitely NOT the position of one who has confidence in his own work.

John Whitman says:

March 6, 2011 at 10:38 pm

I’m glad that you found the time to type up all your observations of concern. Let me be the first to second them. Indeed, if BEST accepts the time series of annual averages as presented by current versions of GHCN and USHCN as bona fide data, then they are doomed to repeat the historical distortions. No matter how sound their method(s) of mathematical estimation may be in principle, in practice it is the quality and the spatial distribution of the station data that ultimately limits the reliability. Unfortunately, there is no one on the BEST team with any practical experience in handling the peculiar problems of sparsely sampled geophysical data, even without the urban-biased siting of long-term land stations. Without any knowledge of what a high-quality temperature record sampled every second reveals in its power spectrum, I’m not even sure that such recondite issues as aliasing of harmonics of the diurnal cycle into the lowest frequencies and the difference between true daily averages and mid-range values obtained from Tmax and Tmin will be given their proper due.
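The distinction between a true daily average and the mid-range value (Tmax + Tmin)/2 can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the diurnal cycle is synthetic, built from a fundamental plus one second harmonic with made-up amplitudes, and sampled once per second. It is not station data and not any team's method.

```python
import numpy as np

def daily_mean_vs_midrange(samples_per_day=86400, mean=15.0,
                           a1=5.0, a2=2.0, phase2=np.pi / 2):
    """Return (true_mean, midrange) for one synthetic day.

    a1, a2 and phase2 are hypothetical amplitudes/phase of the first two
    diurnal harmonics; they are illustrative, not fitted to any record.
    """
    t = np.arange(samples_per_day) / samples_per_day  # fraction of the day
    temp = (mean
            + a1 * np.sin(2 * np.pi * t)              # 24-hour fundamental
            + a2 * np.sin(4 * np.pi * t + phase2))    # 12-hour harmonic
    true_mean = temp.mean()                           # average of all samples
    midrange = 0.5 * (temp.max() + temp.min())        # what Tmax/Tmin gives
    return true_mean, midrange

true_mean, midrange = daily_mean_vs_midrange()
print(f"true daily mean: {true_mean:.3f}")
print(f"(Tmax + Tmin)/2: {midrange:.3f}")
print(f"difference:      {midrange - true_mean:.3f}")
```

With these assumed harmonics the mid-range value sits roughly 1.7 °C below the true mean, purely because the cycle is asymmetric. Setting `phase2=0` makes the cycle antisymmetric and the two values coincide, so the size of the offset depends entirely on the shape of the diurnal cycle.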

AusieDan says:

March 7, 2011 at 5:08 am

“Correlation about 0.8 which means that about 64% of one, “explains” or coincides with the other, not bad for chaotic climate data.

To my mind that means the NCDC is not badly contaminated by UHI nor any of the shortcomings that we tend to concentrate upon.”

The cited zero-lag correlation is largely the product of coherent sub-decadal variations over a ~30-yr interval. “Trends” are the product of multidecadal, quasi-centennial and even longer irregular oscillations. It is precisely at these low frequencies that coherence between intensely urban and provincial town stations most often breaks down in very long station records. The concentration of attention upon fitted linear trends, rather than upon the cross-spectral disparity, is where the mistake is made. You can fit a linear trend to any bounded set of data. That does not mean that one is inherent in the underlying physical process.
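The point that a linear trend can be fitted to any bounded set of data can be seen in a small sketch. The series below is an assumption chosen for illustration: a pure sinusoid with a hypothetical 300-year period, observed over a 100-year window. By construction it has no secular trend at all.

```python
import numpy as np

years = np.arange(100)                       # a 100-year observation window
period = 300.0                               # hypothetical oscillation period (years)
series = np.sin(2 * np.pi * years / period)  # bounded in [-1, 1], no secular trend

# Ordinary least-squares straight-line fit; polyfit returns highest power first.
slope, intercept = np.polyfit(years, series, 1)

print(f"fitted slope: {slope:.4f} units per year")
print(f"series range: {series.min():.2f} to {series.max():.2f}")
```

The fit returns a positive slope of roughly 0.01 units per year even though the underlying process is a bounded oscillation. The fitted "trend" reflects which part of the cycle the window happens to cover, which is why record length relative to the longest oscillation matters.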

beng says:

March 7, 2011 at 6:51 am

I’m not holding my breath.

Roger Pielke, Sr has papers on inherent nite-time low biases that occur even at relatively pristine, rural sites. Because of these biases, he’s recommended that trends be determined from high temperatures only, and simply disregard the min temps. It would be very useful if the BEST team determines additional trends from just daily high temps.

RP Sr should be included in this study (if he isn’t already). At least he should be involved in any review process.

Beng,

The fact is that AGW implies a larger increase in minimum night time temperatures, than daytime temperatures, because at that time, the downwelling radiation is the only factor sending energy to the earth’s surface since the sun is absent. In fact 2/3 of the increase in average temperature observed recently is because of increase in the nighttime minimum temperature.

If you desire to deny that AGW exists, then clearly the best thing to do is to ignore the increase in the nighttime minimum temperature.

Eadler wrote:

“The fact is that AGW implies a larger increase in minimum night time temperatures, than daytime temperatures, because at that time, the downwelling radiation is the only factor sending energy to the earth’s surface since the sun is absent.”

And the anti-AGW counter-theory in fact implies that increase in nighttime temperatures is likely due to increase in concrete/asphalt jungle in surrounding areas, and reduction in impervious cover that reduces evaporative cooling. As a result of lower albedo of pavement and associated increase in thermal mass, improved surfaces accumulate more heat during daytime, and cool off slowly at night, increasing min readings. I am sure you must be familiar with this counter-argument.

eadler says:

March 7, 2011 at 5:26 am

sHx says:

March 6, 2011 at 10:41 pm

“It would make sense for Dr Menne to be a reviewer of the paper. It would improve the quality, assuming the editor is fair minded. In the case of the O’donnell the editor ended up publishing an improved version.”

Classic case of a conflict of interest. The concept is beyond eadler’s understanding.

“If one attacks the institutions of science in the blogosphere, and then attempts to publish something, one should expect to get worked over a little. Scientists are only human. Turnabout is fair play.”

Humans are humans. Scientists are scientists. They are judged on different standards. Opposing the author of the paper that you are reviewing is not just a blow against another human, it is a blow against scientific method. For the latter, a scientist will pay for the rest of his life, the length of his reputation, and to the depth of his conscience. I hope someone keeps eadler away from younger students; otherwise, he will lead them straight to Hell.

Theo Goodwin says:

March 7, 2011 at 2:32 pm

“I hope someone keeps eadler away from younger students; otherwise, he will lead them straight to Hell.”

And he’ll do so instantly–on a radiative beam. Anyone who argues that “The fact is that AGW implies a larger increase in minimum night time temperatures, than daytime temperatures, because at that time, the downwelling radiation is the only factor sending energy to the earth’s surface since the sun is absent” should not be allowed to have any students, for he confuses radiative exchange with net thermal energy transfer. There is, of course, measurable LWIR backradiation from the night sky as part of that exchange, but the energy transfer is in the opposite direction. The unilluminated hemisphere invariably cools at night.
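The bookkeeping behind "radiative exchange" versus "net transfer" can be written out as a rough sketch. It assumes a blackbody surface (emissivity 1) and uses the Stefan-Boltzmann law for the upwelling flux. The surface temperature and the two downwelling values are hypothetical round numbers picked for illustration, not measurements.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def net_longwave_loss(surface_temp_k, downwelling_wm2):
    """Net longwave flux leaving a blackbody surface, in W/m^2.

    Positive means the surface loses energy. Emissivity is assumed
    to be 1 to keep the sketch simple.
    """
    upwelling = SIGMA * surface_temp_k ** 4
    return upwelling - downwelling_wm2

# Hypothetical night-time values: 288 K surface, two downwelling fluxes.
loss_low = net_longwave_loss(288.0, 330.0)
loss_high = net_longwave_loss(288.0, 340.0)

print(f"net loss with 330 W/m^2 downwelling: {loss_low:.1f} W/m^2")
print(f"net loss with 340 W/m^2 downwelling: {loss_high:.1f} W/m^2")
```

Under these assumed numbers the net flux is outward in both cases, so the surface cools either way, while the larger downwelling value gives a smaller net loss. The sketch says nothing about what sets the downwelling flux in the real atmosphere.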

Bob Tisdale says:

March 7, 2011 at 10:05 am

eadler (March 6, 2011 at 4:33 pm): You provided a link to my post on the revised monthly OHC data and a link to an annual GISTEMP LOTI curve and you somehow call them similar. Have you actually scaled one and compared them? Do you know if the two curves are in fact similar, other than having positive trends?

Both having positive trends, to me, constitutes similar. It makes sense. The ocean is 70% of the earth’s surface and the heat capacity of the ocean is quite high. The surface temperature of the oceans even controls the climate of the land. If the ocean is getting warmer, since warm water rises, the warming will be concentrated near the surface, where solar energy is absorbed. Ocean currents and oscillations will redistribute some of the ocean heat, so we won’t get the anomalies to scale exactly, but so what?

The advantage of ocean heat as an index is that there is no argument about records being influenced by the UHI.

Al Tekhasski says:

March 7, 2011 at 2:30 pm

Eadler wrote:

“The fact is that AGW implies a larger increase in minimum night time temperatures, than daytime temperatures, because at that time, the downwelling radiation is the only factor sending energy to the earth’s surface since the sun is absent.”

And the anti-AGW counter-theory in fact implies that increase in nighttime temperatures is likely due to increase in concrete/asphalt jungle in surrounding areas, and reduction in impervious cover that reduces evaporative cooling. As a result of lower albedo of pavement and associated increase in thermal mass, improved surfaces accumulate more heat during daytime, and cool off slowly at night, increasing min readings. I am sure you must be familiar with this counter-argument.

Comparison of rural only temperature data base, with the larger data base, shows that the temperature trends are the same. A number of papers have studied the UHI and found that it is not an important factor in explaining the increase in global average temperature.

http://www.fact-index.com/u/ur/urban_heat_island.html

* land, sea and borehole records are in reasonable agreement over the last century. [1]

* the trends in urban stations for 1951 to 1989 (0.10 °C/decade) are not greatly more than those for all land stations (0.09 °C/decade)

* similarly the rural trend is 0.70 °C/century from 1880 to 1998, which is actually larger than the full station trend (0.65 °C/century) (Peterson et al., GRL, 1999)

* the differences in trend between rural and all stations are also virtually unaffected by elimination of areas of largest temperature change, like Siberia, because such areas are well represented in both sets of stations.

A recent paper (“Assessment of urban versus rural in situ surface temperatures in the contiguous United States: No difference found”; J. Climate; Peterson; 2003) indicates that the effects of the urban heat island may have been overstated, finding that “Contrary to generally accepted wisdom, no statistically significant impact of urbanization could be found in annual temperatures.” This was done by using satellite-based night-light detection of urban areas, and more thorough homogenisation of the time series (with corrections, for example, for the tendency of surrounding rural stations to be slightly higher, and thus cooler, than urban areas). As the paper says, if its conclusion is accepted, then it is necessary to “unravel the mystery of how a global temperature time series created partly from urban in-situ stations could show no contamination from urban warming”. The main conclusion is that micro- and local-scale impacts dominate the meso-scale impact of the urban heat island: many sections of towns may be warmer than rural sites, but meteorological observations are likely to be made in park “cool islands”.

Theo Goodwin says:

March 7, 2011 at 2:32 pm

eadler says:

March 7, 2011 at 5:26 am

sHx says:

March 6, 2011 at 10:41 pm

“It would make sense for Dr Menne to be a reviewer of the paper. It would improve the quality, assuming the editor is fair minded. In the case of the O’donnell the editor ended up publishing an improved version.”

Classic case of a conflict of interest. The concept is beyond eadler’s understanding.

That would not be a conflict of interest. It is use of a person with expertise and a differing view to vet the paper, and improve the quality of the analysis and data, assuming the editor is doing his job properly.

A conflict of interest implies a monetary or personal motive which interferes with a person’s ability to be an impartial judge.

http://en.wikipedia.org/wiki/Conflict_of_interest

It would be undue bias if the editor who makes the decision decides not to publish the paper because of personal animosity, or some other motive not related to the merits of the paper. It is OK if the reviewer has an opinion, if the editor can be impartial, and assigns multiple reviewers, which is normally the case with scientific papers.

eadler says:

March 7, 2011 at 4:55 pm

“Comparison of rural only temperature data base, with the larger data base, shows that the temperature trends are the same.”

Nonsense! One has to manufacture a data sausage spiced with an assortment of “adjustments” and “homogenizations” to get anywhere near the same “trends.” But such cavalier mistreatment of actual measurements by the data-base keepers is what the AGW game–now in its second, spicier version–is all about.

@-Squidly says:

March 7, 2011 at 4:59 am

“…I no longer believe that CO2, or any other “greenhouse” gas can do as you claim. I no longer believe there is such a thing as a “greenhouse” gas, as a gas cannot A) trap anything, or B) back-radiate (which would be required).”

Your belief, or lack of it, has absolutely zero effect on the empirically measured back-radiation from water vapour and CO2 that is monitored by this crowd…

http://www.bsrn.awi.de/

Note that their data includes clear spectra of the emitting molecules, H2O and CO2.

“…There is no evidence to support your point, either empirically or experimentally. This point also happens to be the very lynch pin of the entire AGW argument, for which again, there is absolutely no empirical evidence to support.”

Actually it’s the ‘lynch pin’ of the explanation of why the Earth is ~30 °C warmer than it would be without an atmosphere. The physics is old; the theory of the (ill-named) ‘greenhouse’ effect is around a century old and has not been refuted yet.

Certainly no alternative explanation has appeared which explains the surface warming of the Earth and Venus from the absorption of IR radiation by the atmosphere keeping the surface warmer than it would otherwise be.

The evidence for the atmospheric warming effect is widespread and easily accessed with modern media; your claim that there is ‘no evidence’ would seem to be the result of a lack of knowledge rather than a lack of evidence.

@izen:

And that ~30 C warmer was the same factor when we were “iceball earth” with higher CO2 levels and a temperature about 15 C colder than now? And that worked how again?

I think you have a problem trying to use dynamic data as a static proof (or: If you have a 1/3 or more error band on your “constant” it isn’t very constant… which implies the physics has an issue.)

Or would you like to rephrase that ~30 C to ~15C during Glacials (some of which have had higher CO2 than now…)

http://en.wikipedia.org/wiki/File:Vostok_Petit_data.svg

@-sky says:

March 7, 2011 at 3:54 pm

“There is, of course, measurable LWIR backradiation from the night sky as part of that exchange, but the energy transfer is in the opposite direction. The unilluminated hemisphere invariably cools at night.”

Yes, the NET energy exchange is from ground to space at night.

But the increased LWIR backradiation from the increased CO2 means the NET energy transfer is smaller than it would be without that radiative source.