An attempt at replicating DeepMind's Neural Turing Machines in Theano as part of my bachelor's thesis.

Advisor: Prof. Amit Sethi

Here is the link to the paper: http://arxiv.org/abs/1410.5401

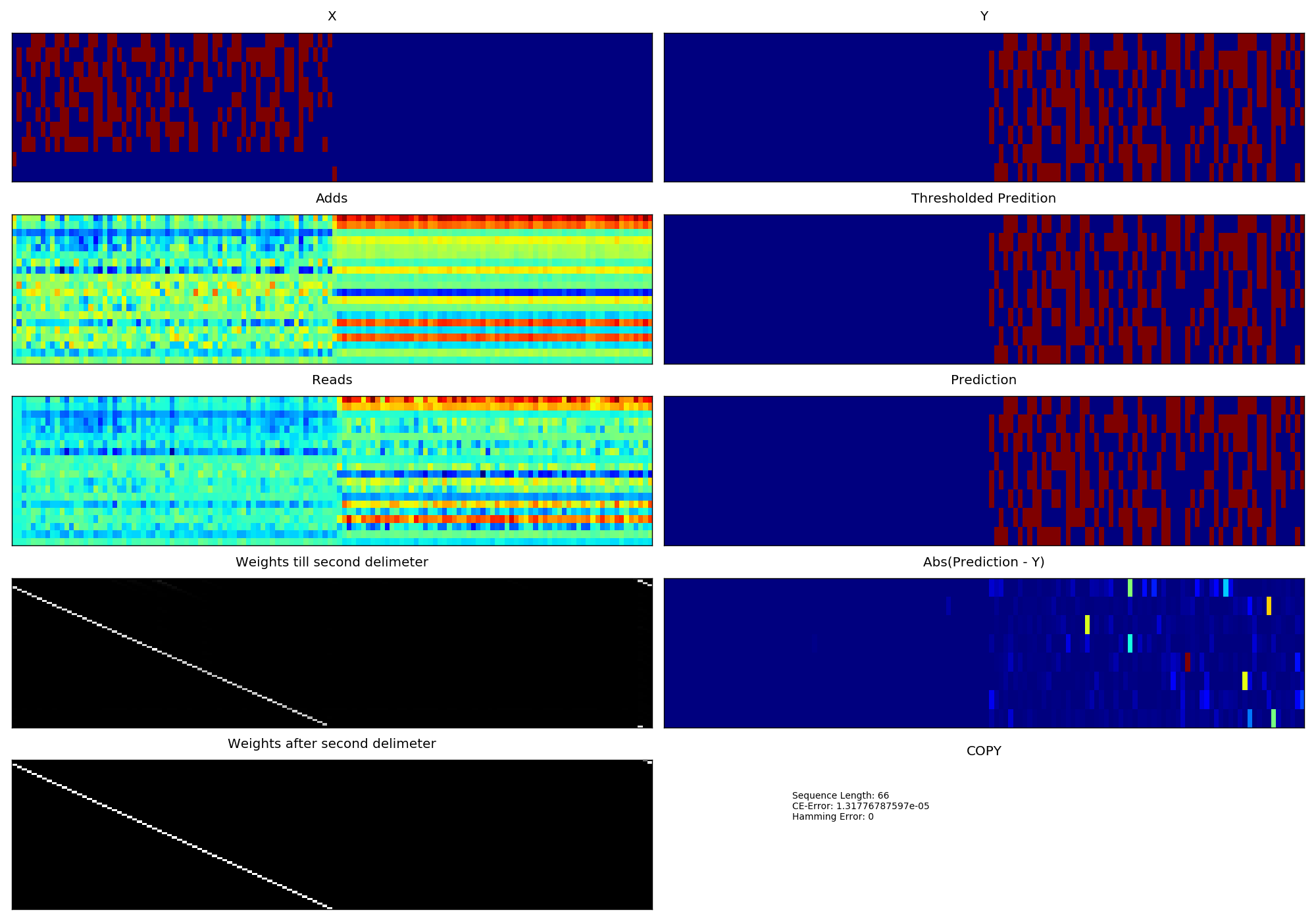

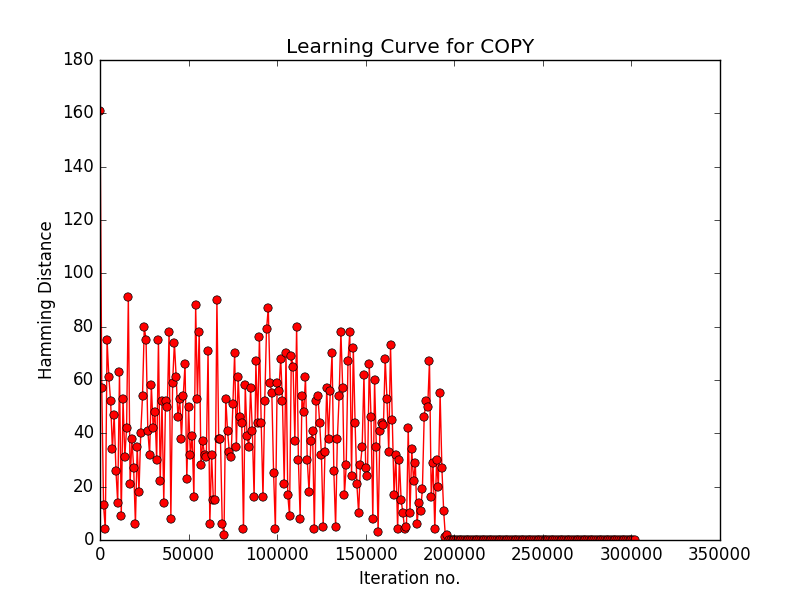

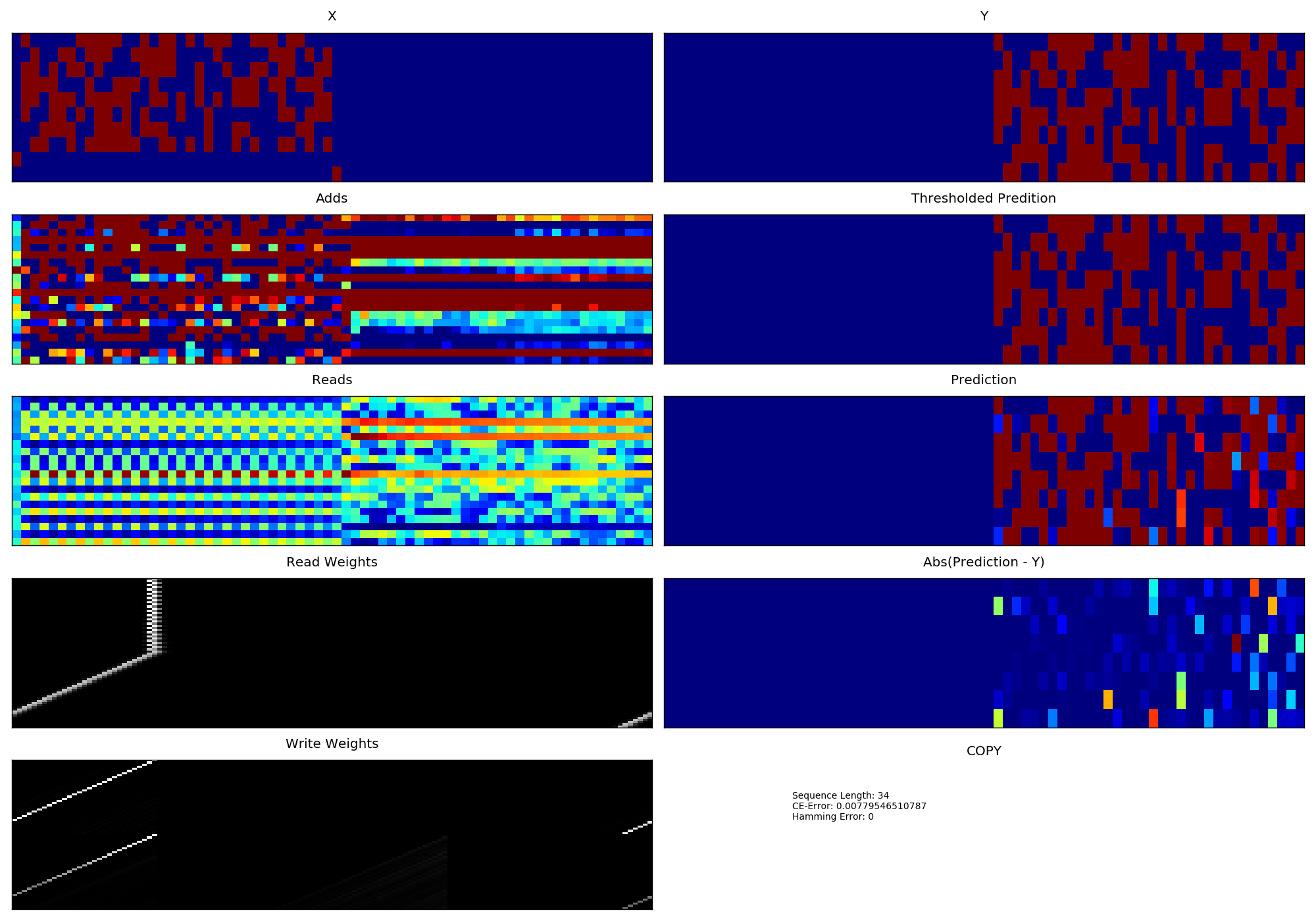

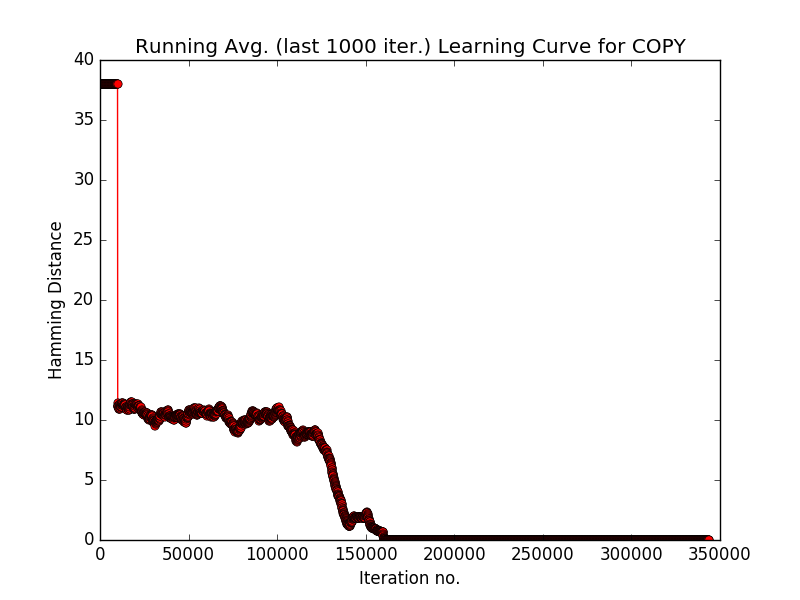

- The following are the results on the copy task for the NTM I implemented from scratch.

- Training uses sequences whose length varies from 1 to 20, with a width of 8 (bits per element in a sequence).

- With version 1 of the NTM, which has a single read-write head.

- Learning curve for version 1 of the NTM (sorry, I didn't compute the sliding-window average in version 1).

- With version 2 of the NTM, which has separate heads for reading and writing.

- Learning curve for version 2 of the NTM.
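To make the training setup above concrete, here is a minimal NumPy sketch of how one copy-task example (length 1 to 20, width 8) could be generated, plus a sliding-window average of the kind mentioned for the learning curves. It is an illustration under assumptions, not the code in `ntm_v*.py`: the function names `make_copy_example` and `moving_average`, the extra delimiter channel, and the zero-padded recall phase are all hypothetical choices.

```python
import numpy as np


def make_copy_example(rng, min_len=1, max_len=20, width=8):
    """Build one (inputs, targets) pair for the copy task.

    Assumed layout (not taken from the repository):
    - inputs has width + 1 channels; the last one is a delimiter flag
    - the sequence is presented first, then the delimiter, then blank steps
    - targets are zero until the delimiter has passed, then repeat the sequence
    """
    length = rng.randint(min_len, max_len + 1)  # upper bound is exclusive
    seq = rng.randint(0, 2, size=(length, width)).astype(np.float32)

    steps = 2 * length + 1
    inputs = np.zeros((steps, width + 1), dtype=np.float32)
    inputs[:length, :width] = seq   # presentation phase
    inputs[length, width] = 1.0     # delimiter marks the end of the input

    targets = np.zeros((steps, width), dtype=np.float32)
    targets[length + 1:] = seq      # recall phase: reproduce the sequence
    return inputs, targets


def moving_average(values, window):
    """Sliding-window mean over a 1-D list of per-iteration costs."""
    kernel = np.ones(window, dtype=np.float64) / window
    return np.convolve(np.asarray(values, dtype=np.float64), kernel, mode="valid")


rng = np.random.RandomState(0)
x, y = make_copy_example(rng)
smoothed = moving_average([1.0, 2.0, 3.0, 4.0], window=2)
```

Smoothing the per-iteration cost this way makes a noisy learning curve easier to read; the window size is a free choice.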

For training, set the following in `ntm_v*.py`:

`to_test = False`

To run your own trained model or a pre-trained model from the model directory, set the following in `ntm_v*.py`:

`to_test = True`

`test_path = path_to_npz_model_parameters_file`
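For readers unfamiliar with `.npz` files, the sketch below shows how a dictionary of parameter arrays can be written to and read back from one with NumPy. It is only an illustration: the helper names `save_params` and `load_params` and the parameter names `W_hidden` and `b_hidden` are hypothetical, and the actual contents of the files in the model directory may be organized differently.

```python
import os
import tempfile

import numpy as np


def save_params(path, params):
    """Write a dict of named arrays to a single .npz archive."""
    np.savez(path, **params)


def load_params(path):
    """Read every array in a .npz archive back into a dict."""
    with np.load(path) as data:
        return {name: data[name] for name in data.files}


# Hypothetical parameters standing in for a trained model.
params = {
    "W_hidden": np.zeros((4, 3), dtype=np.float32),
    "b_hidden": np.ones(3, dtype=np.float32),
}

# A temporary file plays the role of the path assigned to test_path.
test_path = os.path.join(tempfile.mkdtemp(), "model.npz")
save_params(test_path, params)
restored = load_params(test_path)
```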

Please visit this link for my bachelor's thesis report.

Please visit this link for a presentation of my thesis, with comments.

Check out the reading material directory of this project on GitHub for some papers on RAM-based models.

- fumin explains the NTM very nicely, with a working implementation in Go. Check out: https://github.com/fumin/ntm

- Another NTM implementation in Theano, by shawntan. Check out: https://github.com/shawntan/neural-turing-machines

- Making the NTM work on the other tasks described in the paper.

- Using the NTM to build agents for playing games (deep reinforcement learning).