There are many classes of reliability issues (e.g. failures/misconfigurations in intermediate networks) that we only find out about via direct, manual reports from users, or (for very widespread cases) we notice because traffic is 'missing' and below expected rates.

Some sort of 'external' monitoring is the usual solution to such blind spots, but such solutions come with their own false positives and other limitations: they require agreements with commercial providers; their APIs for scraping result data are sometimes limited; they often have reliability problems that are specific to the monitoring provider's infrastructure rather than something 'real'; a provider's probes generally run within datacenters instead of on Internet edges or within residential ISP networks; the geographic distribution of a provider's probes doesn't match the geographic distribution of our userbase; the characteristics of synthetic traffic don't necessarily match those of real traffic; and so on.

Another option is to ask browsers to send you an error report some fraction of the time when they can't fetch from your site. This mechanism is specified in a W3C draft technical report, the Network Error Logging (NEL) API, which builds on the broader Reporting API. Currently NEL is implemented and enabled by default only in Chrome >=71 and Edge >=79, but that's still a large fraction of all traffic and users.

A site asks browsers to enable NEL by serving the HTTP response headers Report-To and NEL, which together define a set of endpoints that can receive reports, separate sampling fractions for failures and successes, and a TTL for which the user's browser stores the entire definition. See Sample Policy Definitions.
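As a rough illustration of what such a policy looks like on the wire, the sketch below builds the two header values as compact JSON strings. The field names (`group`, `max_age`, `endpoints`, `report_to`, `failure_fraction`, `success_fraction`) follow the NEL and Reporting API drafts; the function name, the group name, the endpoint URL, and every numeric value are placeholders chosen for the example, not our actual configuration.

```python
import json


def build_nel_headers(endpoint_url, group="nel", max_age=3600,
                      failure_fraction=0.05, success_fraction=0.0):
    """Return the Report-To and NEL response headers as a dict of strings.

    All defaults are illustrative assumptions, not production values.
    """
    # Report-To names a group of endpoints that may receive reports.
    report_to = {
        "group": group,
        "max_age": max_age,  # seconds the browser may keep this definition
        "endpoints": [{"url": endpoint_url}],
    }
    # NEL points at that group and sets the sampling fractions.
    nel = {
        "report_to": group,
        "max_age": max_age,
        "failure_fraction": failure_fraction,
        "success_fraction": success_fraction,
    }
    return {
        "Report-To": json.dumps(report_to, separators=(",", ":")),
        "NEL": json.dumps(nel, separators=(",", ":")),
    }


# Placeholder endpoint; a real deployment would use its own reporting URI.
headers = build_nel_headers("https://reports.example.org/nel")
for name, value in headers.items():
    print(f"{name}: {value}")
```

Starting with a short `max_age` and a small `failure_fraction` keeps a mistaken policy from lingering in browsers for long.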

Privacy concerns

See Sample Network Error Reports

Error reports are full of PII. They're sent from user IP addresses and contain the URL that the user was attempting to fetch and any Referer: from that original request; in the future they could optionally include specific request or response headers from the original request. They require TLS over the wire and deserve all the same protections that our log data gets at rest.
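To make the privacy point concrete, here is a made-up report shaped after the NEL draft, with a small helper that pulls out the fields most likely to carry personal data. The report values, the `SENSITIVE_PATHS` list, and the `sensitive_values` helper are all assumptions for illustration; what actually counts as sensitive is a matter for the privacy review. The client IP address is not in the report itself: the collector sees it on the connection that delivers the report.

```python
# Illustrative report shaped after the NEL draft; every value is invented.
SAMPLE_REPORT = {
    "age": 1200,
    "type": "network-error",
    "url": "https://www.example.org/wiki/Some_page",
    "user_agent": "Mozilla/5.0 (example)",
    "body": {
        "referrer": "https://search.example.com/?q=some+query",
        "sampling_fraction": 0.05,
        "server_ip": "192.0.2.10",
        "protocol": "h2",
        "method": "GET",
        "status_code": 0,
        "elapsed_time": 30000,
        "phase": "connection",
        "type": "tcp.timed_out",
    },
}

# Hypothetical list of field paths to treat as sensitive.
SENSITIVE_PATHS = [("url",), ("user_agent",), ("body", "referrer")]


def sensitive_values(report):
    """Return {dotted.path: value} for each sensitive field present."""
    found = {}
    for path in SENSITIVE_PATHS:
        node = report
        for key in path:
            # Walk down the nested dicts; stop if a level is missing.
            node = node.get(key) if isinstance(node, dict) else None
            if node is None:
                break
        if node is not None:
            found[".".join(path)] = node
    return found


for field, value in sensitive_values(SAMPLE_REPORT).items():
    print(f"{field}: {value}")
```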

v0: Minimum viable deployment:

- Legal review

- T259160 Privacy review

- Configure EventGate to receive NEL reports and store them in Logstash via Kafka.

- We'll likely use the exact same EventGate instances set up to receive client-side Javascript error reports in T226986.

- However, EventGate-Wikimedia will have to be modified, as NEL reports don't include EventGate-specific expected metadata fields. @Ottomata has prepared a simple patch that ought to allow us to set a reporting endpoint URI of something like https://intake-logging.wikimedia.org/v1/events?schema_uri=/network/error/logging/1.0.0&stream=network.error

- Determine whether or not we want additional stream processing to split apart NEL responses into their component events, as each POST made to the reporting endpoint is potentially a batch of multiple error reports. (Not needed: EventGate does this already!)

- Modify EventGate to be compatible with the CORS headers required by Chrome: https://github.com/wikimedia/eventgate/pull/10 and https://gerrit.wikimedia.org/r/c/eventgate-wikimedia/+/623005

- Write a schema that matches the NEL specification and verify it validates reports generated by Chrome stable.

- Modify eventgate-logging-external's configuration to enable CORS mode T262087

- Deploy an eventgate-wikimedia with all of these changes. T262087

- Ensure some manually-constructed test events are making their way through EventGate to Logstash (first document) and are being parsed correctly (document w/ correct parsing).

- Begin sending Report-To and NEL headers on our responses. https://gerrit.wikimedia.org/r/c/operations/puppet/+/627629

- The traffic layer seems like the right place to insert these headers. We should do a staged rollout, starting with a small fraction of traffic and with short TTLs, and expand once confident.

- Construct VCL that successfully emits JSON strings as response headers (surprisingly hard)

- Launch on group0 wiki domains

- Launch on group1 wiki domains

- Launch on all domains

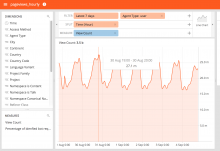

- Build a reasonably-nice Logstash dashboard to aggregate NELs.
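The batching mentioned in the checklist above works like this: the browser POSTs a JSON array to the reporting endpoint, and each element is one report. EventGate already splits these apart, so the sketch below is only meant to show the shape of the problem; the function name and the sample payload are invented for the example.

```python
import json


def split_report_batch(raw_body):
    """Split one POST body into a list of individual NEL reports.

    Accepts bytes or str. Reports whose "type" is not "network-error"
    (the Reporting API can carry other report types) are dropped.
    """
    payload = json.loads(raw_body)
    if isinstance(payload, dict):
        # Tolerate a single unbatched report.
        payload = [payload]
    if not isinstance(payload, list):
        raise ValueError("expected a JSON array of reports")
    return [
        report for report in payload
        if isinstance(report, dict) and report.get("type") == "network-error"
    ]


# Invented batch: two NEL reports and one unrelated report type.
batch = json.dumps([
    {"type": "network-error", "url": "https://www.example.org/a",
     "body": {"phase": "dns", "type": "dns.name_not_resolved"}},
    {"type": "deprecation", "url": "https://www.example.org/b", "body": {}},
    {"type": "network-error", "url": "https://www.example.org/c",
     "body": {"phase": "connection", "type": "tcp.timed_out"}},
])

for report in split_report_batch(batch):
    print(report["url"], report["body"]["type"])
```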

v1: Improvements that aren't too hard

- T261340 Set up a "backwards GeoDNS" hostname that routes users to a faraway datacenter, or at least, a datacenter that won't be their usual primary datacenter. Use that hostname to receive error reports.

- Browsers are supposed to buffer reports and retry later if they can't send them the first time, but this will help us receive reports as outages are happening, not after they're resolved.

- There are possibly other alternatives to collecting reports via other-than-usual-datacenter endpoints: T261340#6437198

- Consider if we want NEL reports stored anywhere other than Logstash -- e.g. it might be useful to also have them in their own table in Hive.

- T263496 Augment the reported events with geoIP country data and AS number data (either as part of some sort of stream processing, or by adding a feature to eventgate-wikimedia)
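The geoIP/AS augmentation in the list above could look roughly like the following, wherever it ends up living. This is only a sketch: the `lookup` callable stands in for whatever geoIP database client is actually used, and the `geo`, `country`, and `as_number` field names are hypothetical, not part of any existing schema. Note that the sketch attaches only the derived data and does not copy the client IP into the event.

```python
def augment_with_geo(report, client_ip, lookup):
    """Return a copy of `report` with country and AS number attached.

    `lookup` is a hypothetical callable: ip -> (country_code, as_number).
    """
    country, as_number = lookup(client_ip)
    enriched = dict(report)  # shallow copy; the original is left untouched
    enriched["geo"] = {"country": country, "as_number": as_number}
    return enriched


# Stand-in lookup table using documentation-reserved addresses and ASNs.
FAKE_GEO_DB = {"198.51.100.7": ("NL", 64500)}


def fake_lookup(ip):
    # Unknown addresses map to (None, None) rather than raising.
    return FAKE_GEO_DB.get(ip, (None, None))


event = {"type": "network-error", "body": {"type": "tcp.timed_out"}}
print(augment_with_geo(event, "198.51.100.7", fake_lookup))
```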

Harder / more open-ended future work:

- Attempt some sort of alerting based on receiving NELs. (Done, IRC alerting only.)

- Set a nonzero success_fraction to also collect latency data for GeoDNS-mapping test URLs served at the edge; use that to improve our GeoDNS assignments.

- Consider setting up some off-WMF-infrastructure report collectors, taking extra care to keep them maintainable, secure, and to not store PII at rest there. This would all require careful planning and review, but the upside is we could get near-realtime data about user issues, despite those users being unable to reach any WMF infrastructure.
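For the alerting item above, one simple approach is to count reports in a sliding time window and alert when the count crosses a threshold. The sketch below shows that idea only; it is not a description of the existing IRC alerting, and the class name, window length, and threshold are assumptions. It expects timestamps to arrive in non-decreasing order.

```python
from collections import deque


class ReportRateAlert:
    """Flag when more than `threshold` reports arrive within a window."""

    def __init__(self, window_seconds=60, threshold=100):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self._timestamps = deque()

    def observe(self, timestamp):
        """Record one report; return True if the window exceeds the threshold."""
        self._timestamps.append(timestamp)
        cutoff = timestamp - self.window_seconds
        # Drop reports that have fallen out of the window.
        while self._timestamps and self._timestamps[0] <= cutoff:
            self._timestamps.popleft()
        return len(self._timestamps) > self.threshold


# Tiny window and threshold so the behaviour is easy to follow.
alert = ReportRateAlert(window_seconds=10, threshold=3)
results = [alert.observe(t) for t in (0, 1, 2, 3, 20)]
print(results)
```

Because reports are sampled, a real threshold would have to take the configured `failure_fraction` into account.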