Workaround

To clear the deadlock, disconnect Jenkins from the Gearman server and then reconnect it. This is done from the https://integration.wikimedia.org/ci/manage page:

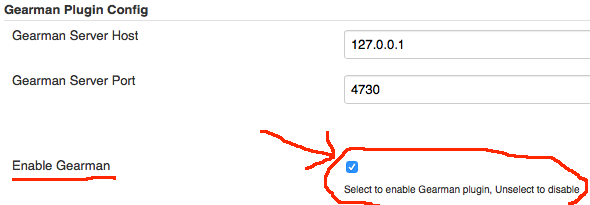

Uncheck the Gearman box, scroll to the bottom of the page and save. That clears the deadlock instantly. After a few seconds, check the box again and save.

If it still fails, restart Jenkins entirely. :(

The upstream bug is https://issues.jenkins-ci.org/browse/JENKINS-25867

From James' email to the QA list:

Beta Labs isn't synchronising; AFAICS it hasn't done so since ~ 11 hours ago (15:10 UTC on 2014-09-08). I noticed this when prepping a patch for tomorrow and found that.

Going to https://integration.wikimedia.org/ci/view/Beta/ I found that "beta-update-databases-eqiad" had been executing for 12 hours, and initially assumed that we had a run-away update.php issue again. However, on examining it looks like "deployment-bastion.eqiad", or the Jenkins executor on it, isn't responding in some way:

pending—Waiting for next available executor on deployment-bastion.eqiad

I terminated the beta-update-databases-eqiad run to see if that would help, but it just switched over to beta-scap-eqiad being the pending task.

Having chatted with MaxSem, I briefly disabled the deployment-bastion.eqiad node in the Jenkins interface and then re-enabled it, to no effect.

Any ideas?
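The symptom described above (queue items sitting for hours with "Waiting for next available executor on deployment-bastion.eqiad") can be spotted without eyeballing the Beta view. Below is a minimal sketch, assuming the standard Jenkins remote access API at `<jenkins>/queue/api/json`, whose `items` carry `why`, `inQueueSince` (epoch milliseconds) and `task.name`. The one-hour threshold and the helper names `stuck_items` and `fetch_queue` are hypothetical choices for illustration, not part of any existing tooling, and authentication is not handled.

```python
import json
import urllib.request

# Prefix of the Jenkins "why" text for items blocked on a busy/dead executor.
WAITING_PREFIX = "Waiting for next available executor"
# Hypothetical threshold: flag anything waiting for an hour or more.
DEFAULT_THRESHOLD_MS = 60 * 60 * 1000


def stuck_items(queue, now_ms, threshold_ms=DEFAULT_THRESHOLD_MS):
    """Return (task name, minutes waited, reason) for long-waiting queue items.

    `queue` is the decoded JSON of <jenkins>/queue/api/json.
    """
    result = []
    for item in queue.get("items", []):
        why = item.get("why") or ""
        since = item.get("inQueueSince")
        # Only consider items that are blocked waiting for an executor.
        if since is None or not why.startswith(WAITING_PREFIX):
            continue
        waited = now_ms - since
        if waited >= threshold_ms:
            name = item.get("task", {}).get("name", "?")
            result.append((name, waited // 60000, why))
    return result


def fetch_queue(base_url):
    """Fetch and decode <base_url>/queue/api/json (anonymous read access assumed)."""
    url = base_url.rstrip("/") + "/queue/api/json"
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)
```

For example, `stuck_items(fetch_queue("https://integration.wikimedia.org/ci"), int(time.time() * 1000))` (with `import time`) would list jobs such as beta-scap-eqiad if they had been pending for over an hour. Detection is kept separate from fetching so the check can be run against a saved queue dump as well.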

November 2014 thread dump:

https://fastthread.io/my-thread-report.jsp?p=c2hhcmVkLzIwMTkvMDUvMy8tLWplbmtpbnMtdGhyZWFkcy1kdW1wLnR4dC0tMTAtMjctMw==

July 2019 one:

Another thread dump: P8736

https://fastthread.io/my-thread-report.jsp?p=c2hhcmVkLzIwMTkvMDcvMTEvLS10aHJlYWRkdW1wLnR4dC0tOS0zMC0zMg==