Computational models attempt to explain the behavior of the brain using neural network simulations, but assumptions that help simulate one phenomenon are destructive to the simulation of another.

My previous posts discussed examples of unsolved problems in modern neuroscience, from the difficulties of explaining working memory and its various properties to the lack of a theory that can explain perception or the way we recognize a grandmother. I also talked about spontaneous activity and how it appears to be superfluous, if not utterly debilitating to the brain’s capacity to think.

What is common among those unsolved puzzles? They all need to be solved before we have a successful theory of the brain. The best that our mainstream theory can do today is to explain each of them separately. This means that when one introduces a new idea about the functionality of the brain’s neural networks that explains one puzzle, it does not help explain the others. The explanations that we offer nowadays remain narrow.

Building computational models to fit phenomenology

Let us start with an example: the avalanche structure of spontaneous brain activity, a particular statistical structure in which spontaneous activity is organized. Researchers have tried to explain it with computer simulations (e.g., Benayoun et al. 2010; Hahn et al. 2014). Unfortunately, there seems to be no other way of building such a simulation but by introducing some sort of random process. Simulated neurons have to decide randomly whether or not they will send a signal to other neurons. At some point the simulation starts exhibiting the properties of neural avalanches as we see them in real biological data. On the face of it, this looks like a success: it shows (at least potentially) that the researchers may have established a connection between their assumptions, including randomness, and the real brain, one that points to how the brain could be creating these avalanches.
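To make the role of randomness concrete, here is a minimal sketch in Python. It is not the model of Benayoun et al. or of Hahn et al.; it is a plain branching process, a common textbook stand-in for avalanche dynamics. Every name and parameter in it (simulate_avalanche, branching_ratio, n_targets, max_size) is an assumption made purely for illustration.

```python
import random


def simulate_avalanche(branching_ratio, n_targets=10, max_size=5000, rng=random):
    """Return the size of one avalanche started by a single active neuron.

    Hypothetical toy model: each active neuron has n_targets outgoing
    connections and randomly decides, per connection, whether to send a signal.
    """
    p_send = branching_ratio / n_targets  # chance that a given signal is sent
    active = 1   # neurons active in the current time step
    size = 1     # total number of activations so far
    while active > 0 and size < max_size:
        # Count how many downstream neurons get activated by random decisions.
        next_active = sum(
            1 for _ in range(active * n_targets) if rng.random() < p_send
        )
        size += next_active
        active = next_active
    return size


rng = random.Random(0)  # fixed seed so that runs are repeatable
critical = [simulate_avalanche(1.0, rng=rng) for _ in range(1000)]
subcritical = [simulate_avalanche(0.5, rng=rng) for _ in range(1000)]
print("largest avalanche, ratio 1.0:", max(critical))
print("largest avalanche, ratio 0.5:", max(subcritical))
```

In this toy setting, a branching ratio near 1 lets most avalanches die out after a few activations while a few grow very large; a ratio well below 1 keeps all of them small. The only point is that the avalanche-like statistics come directly from the random decisions of the simulated neurons.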

The problem is that there are too many such explanations, each working only for a small set of real phenomena. You need one type of explanation for spontaneous activity, another type for the limited capacity of working memory, a third type for combining elementary features during perception, and so on. Nobody knows how to integrate all those explanations into a single theory.

Distinct assumptions put models in conflict

For example, the computational explanations of the properties of working memory (e.g., Frank, Loughry & O’Reilly 2001; Grossberg & Pearson 2008) cannot afford to adopt the randomness of the avalanche models. Randomness would degrade the performance of such a model, and its authors would risk not being able to make the simulation exhibit the properties of the real data.

And the same holds for models of perception. They need to avoid not only the assumptions used to explain spontaneous activity, but possibly also those used to explain some properties of working memory or attention. A set of assumptions that is successful for one phenomenon gets in the way of another.

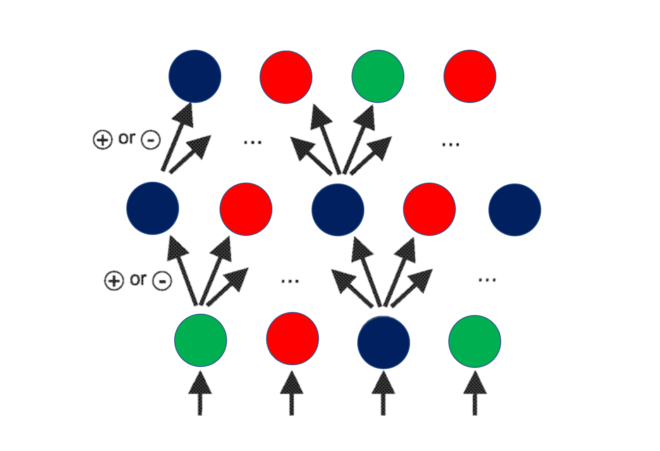

For example, a model of working memory may employ some sort of recurrent activity in order to maintain information in storage (e.g., Botvinick & Plaut 2006; Grossberg & Pearson 2008). Recurrence is when neurons feed forward in a chain, like A to B, B to C, and so on, but then at the end of the chain the activity is fed back to its beginning, i.e., to neuron A. The problem is again that recurrence does not explain the phenomena of attention, perception, spontaneous activity, or anything else. Essentially, the network connectivity patterns assumed to explain each phenomenon are quite different.

Figure 1 (left): A) Connectivity that can simulate maintenance of information in working memory. B) Connectivity that can produce attentional effects. C) Connectivity that produces simple object detection, used commonly in deep learning. D) Connectivity for more elaborate object detection.

Pluses indicate excitatory connections. Minuses indicate inhibitory connections.
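The recurrent chain described above (A to B, B to C, and back to A) can be sketched in a few lines of Python. This is a deliberately bare toy, not the model of Botvinick & Plaut or of Grossberg & Pearson; the function name step and the three-neuron ring are assumptions chosen for illustration.

```python
def step(state):
    """Pass each neuron's activity to the next one in the chain.

    The last neuron feeds back to the first, which closes the loop.
    """
    return [state[-1]] + state[:-1]


state = [1, 0, 0]   # neuron A is active, B and C are silent
history = [state]
for _ in range(6):
    state = step(state)
    history.append(state)

for t, s in enumerate(history):
    print(t, s)
```

Because the loop is closed, the single unit of activity never leaves the ring: it keeps circulating, and that is the sense in which such connectivity can hold information in storage. Nothing in this wiring, however, selects among inputs or detects objects.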

Potential outcomes of an integrated model

The models that explain each phenomenon require a different set of assumptions. One cannot build a computational model of a network that has all these assumptions implemented simultaneously, all of them working concurrently and making sense. For example, this would require a model within which each neuron has the type of connectivity and randomness for creating avalanches, and on top of that recurrent connectivity to store information like working memory, and lateral connectivity necessary to account for the properties of attention, and the feed-forward and feedback connectivity to explain various phenomena of object perception. Everything should be mingled together within one network.

What would happen? Would such a network suddenly start working like a human brain? If we amalgamated hundreds of existing simulation models in that way, would the resulting network explain everything the individual models have explained? Would it, in addition, suddenly acquire some emergent properties that explain experimental data one did not even intend to explain? In other words, would one perhaps obtain more explanatory power with a combined model than with the individual ones?

Unfortunately, this is not the case. The opposite would happen. Such a system would quickly break down and stop doing anything useful. The network would no longer behave in accordance with the data on spontaneous activity, nor would it mimic the properties of working memory, nor would it agree with anything else we know about the brain. Most likely, such an effort would result in an utter mess.

A lateral connection that is good for attentional filtering may be bad for detecting features of a grandmother. A feed-forward connectivity effective in detecting grandmothers may not be good for maintaining information in working memory. Random spontaneous activity needed to explain avalanches may make the system lose information even before it closes the recurrent cycle of working memory or before the activity reaches the top levels of the object detection hierarchy.
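The last of these conflicts can be illustrated with a toy experiment in Python: a recurrent ring that stores a binary pattern, with random spontaneous activity superimposed on it. This is only a sketch under strong assumptions and does not reproduce any of the cited models; run_loop, retention, p_noise, and the eight-neuron pattern are all hypothetical names and values.

```python
import random


def run_loop(pattern, n_steps, p_noise, rng):
    """Circulate a binary pattern around a ring of neurons.

    On every step each neuron passes its activity on, and then, with
    probability p_noise, spontaneously flips its state (fires or goes silent).
    """
    state = list(pattern)
    for _ in range(n_steps):
        state = [state[-1]] + state[:-1]                        # recurrent pass
        state = [s ^ (rng.random() < p_noise) for s in state]   # random flips
    return state


def retention(p_noise, trials=500, seed=0):
    """Fraction of trials in which the pattern survives five trips around the ring."""
    rng = random.Random(seed)
    pattern = [1, 0, 0, 1, 0, 0, 0, 0]
    n_steps = 5 * len(pattern)   # after whole trips the pattern should line up again
    intact = sum(
        run_loop(pattern, n_steps, p_noise, rng) == pattern for _ in range(trials)
    )
    return intact / trials


for p in (0.0, 0.01, 0.05):
    print(f"p_noise={p}: fraction of patterns retained = {retention(p)}")
```

Without noise the ring retains the pattern on every trial. Once even a small amount of random activity is added, the stored pattern is corrupted on a large share of trials, which is the kind of interference between assumptions that the paragraph above describes.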

And one should not forget that I am describing here only a small sample of the actual brain and behavioral phenomena that would need to be explained. In my previous posts I described multiple phenomena within working memory and perception that are already difficult to explain. And there are many more. Some of these additional topics are decision making (Link, 1992; Nikolić & Gronlund, 2002), creativity (Getzels & Jackson, 1962), memory storage and retrieval (Shiffrin & Atkinson, 1969), synaesthesia (e.g., Mroczko et al., 2009), and numerous anatomical and physiological properties (Olshausen & Field, 2006), including oscillatory activity (Fries, Nikolić & Singer, 2007). And of course, there is an entire corpus of phenomena commonly referred to as consciousness, which mainstream brain theory does not even know how to begin explaining (e.g., Dennett, 1993; Chalmers, 1995).

Figure 2: A circuit capable of producing oscillatory activity on its own, but probably debilitating the performance of all other connectivity structures in Figure 1 if added to each neuron.

Need for a new strategy

Computational neuroscience faces an immense challenge when it comes to explaining the mind. Neuroscience as a discipline is struggling. Our computational explanations are disjointed and unable to tie things together. This is not only a technical problem or a problem of getting the right empirical data. It is equally an epistemic problem and a problem of general strategy: how to even approach such a formidable question as “How does the brain foster mind?” Quite possibly some fundamental things that go beyond networks of neurons are still missing from our overall framework.

Notably, when it comes to influence on society and the general public, philosophy is about as successful as neuroscience in informing the world about mind and brain issues. This is despite only a small fraction of the total money invested in that research being directed towards philosophical efforts. So, looking at it in this way, philosophy is tremendously successful. Why? I think this is because the philosophers’ job is the big picture. And we, as neuroscientists, still have not got the big picture right. Philosophers have a lot to say about that. They are pretty good at at least two things that neuroscience can profit from: pointing out our limitations (e.g., Chalmers, 1995) and suggesting new directions.

The author, Danko Nikolić, is affiliated with savedroid AG, Frankfurt Institute for Advanced Studies and Max Planck Institute for Brain Research

References

Benayoun, M., Cowan, J. D., van Drongelen, W., & Wallace, E. (2010). Avalanches in a stochastic model of spiking neurons. PLoS computational biology, 6(7), e1000846.

Botvinick, M. M., & Plaut, D. C. (2006). Short-term memory for serial order: a recurrent neural network model. Psychological review, 113(2), 201.

Chalmers, D. J. (1995). Facing up to the problem of consciousness. Journal of consciousness studies, 2(3), 200-219.

Dennett, D. C. (1993). Consciousness explained. Penguin UK.

Getzels, J. W., & Jackson, P. W. (1962). Creativity and intelligence: Explorations with gifted students.

Grossberg, S., & Pearson, L. R. (2008). Laminar cortical dynamics of cognitive and motor working memory, sequence learning and performance: toward a unified theory of how the cerebral cortex works. Psychological review, 115(3), 677.

Frank, M. J., Loughry, B., & O’Reilly, R. C. (2001). Interactions between frontal cortex and basal ganglia in working memory: a computational model. Cognitive, Affective, & Behavioral Neuroscience, 1(2), 137-160.

Fries, P., Nikolić, D., & Singer, W. (2007). The gamma cycle. Trends in neurosciences, 30(7), 309-316.

Hahn, G., Bujan, A. F., Frégnac, Y., Aertsen, A., & Kumar, A. (2014). Communication through resonance in spiking neuronal networks. PLoS computational biology, 10(8), e1003811.

Link, S. W. (1992). The wave theory of difference and similarity. Psychology Press.

Mroczko, A., Metzinger, T., Singer, W., & Nikolić, D. (2009). Immediate transfer of synesthesia to a novel inducer. Journal of Vision, 9(12), 25-25.

Nikolić, D., & Gronlund, S. D. (2002). A tandem random walk model of the SAT paradigm: Response times and accumulation of evidence. British Journal of Mathematical and Statistical Psychology, 55(2), 263-288.

Olshausen, B. A., & Field, D. J. (2006). What is the other 85 percent of V1 doing? In J. L. van Hemmen & T. J. Sejnowski (Eds.), 23 problems in systems neuroscience (pp. 182-211).

Shiffrin, R. M., & Atkinson, R. C. (1969). Storage and retrieval processes in long-term memory. Psychological Review, 76(2), 179.

Dear Jan,

Very interesting. I agree that we need something “completely” new. Please stay tuned. I will be suggesting some surprisingly new ideas.

Danko

Please keep in mind that in biology one is not dealing with silicon chips but with water (H2O) solutions and membranes.

Good point.

One major thing that’s missing from computational neuroscience is any consideration of the analog aspect of brain function. In real life, the digital, on-off action potentials carried by single axons are far from the only game in town. Clusters of dendrites and extracellular local field potentials are at least as important — and in the case of some of the functions mentioned, almost certainly more so.

As Jan Lakota suggests, we’re not computers. We are biological organisms. You cannot simulate a brain solely using metaphors derived from digital technology.

It’s possible I missed something here, but why would we want to assume all brain regions have the same architecture? (Correct me if I’m wrong, but it sounds to me that the whole crisis argument requires this assumption.) Why can’t it be that a model that explains visual processes with architecture D models the function of visual areas, while a model architecture akin to A models prefrontal processes? We know there are marked anatomical differences between these regions, so the assumption of differentiation seems reasonable.

I was wondering the same thing. AFAIK, even concepts that connect multiple brain regions like large scale brain networks do not typically require that the different regions have homogeneous architecture. I’d love to know what I am missing. Cheers!

One reason an anatomical separation of architecture may not work is that function is not anatomically confined. See this post for an example. https://sapienlabs.org/stimulation-sensation-localization-cortex/

I am a second year PhD student in computational neuroscience. After reading this I am wondering what kind of project to do for my thesis which will be useful.

Consider doing an “anti-” project. Do something that would expose the problems of computational models. For example, one could try building a superimposed model out of multiple individual models and see what happens. Then one can analyze the combined system and understand better where and why the limitations emerge. If done well, something like this could be incredibly useful and could make a tremendous impact on the field.

Nice commentary. But is giving up the solution? Just because we haven’t solved the problem doesn’t mean we should stop, right? Get to work!

Rune,

Yes, of course. We need to get to work.

We are working