Perf Matters at Wikipedia in 2015

By Timo Tijhof, Principal Engineer, Wikimedia Performance Team

Hello, WANObjectCache

This year we achieved another milestone in our multi-year effort to prepare Wikipedia for serving traffic from multiple data centres.

The MediaWiki application that powers Wikipedia relies heavily on object caching. We use Memcached as a horizontally scaled key-value store, and we’d like to keep the cache local to each data centre. This minimises dependencies between data centres, and makes better use of storage capacity (based on local needs).

Aaron Schulz devised a strategy that makes MediaWiki caching compatible with the requirements of a multi-DC architecture. Previously, when source data changed, MediaWiki would recompute and replace the cache value. Now, MediaWiki broadcasts “purge” events for cache keys. Each data centre receives these and sets a “tombstone”, a marker lasting a few seconds that limits any set-value operations for that key to a minuscule time-to-live. This makes it tolerable for recache-on-miss logic to recompute the cache value using local replica databases, even though they might have several seconds of replication lag. Heartbeats are used to detect the replication lag of the databases involved during any re-computation of a cache value. When that lag is more than a few seconds (a large portion of the tombstone period), the corresponding cache set-value operation automatically uses a low time-to-live. This means that large amounts of replication lag are tolerated.

This and other aspects of WANObjectCache’s design allow MediaWiki to trust that cached values are not substantially more stale than a local replica database, provided that cross-DC broadcasting of tiny in-memory tombstones is not disrupted.
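To make the tombstone idea concrete, here is a minimal sketch in Python. It is not WANObjectCache's actual code: the `LocalCache` class, its method names, and the three constants are all hypothetical and chosen only for illustration. It shows a purge dropping the value and setting a short-lived tombstone, and a set-value operation clamping its time-to-live while a tombstone is active or while the reported replication lag is high.

```python
import time

# All three values are assumptions for this sketch, not Wikimedia's settings.
TOMBSTONE_TTL = 10.0  # how long a purge marker lasts, in seconds
LOW_TTL = 1.0         # TTL forced on values that may already be stale
MAX_LAG = 5.0         # replication lag (seconds) above which the TTL is clamped


class LocalCache:
    """In-memory stand-in for the cache of a single data centre."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._values = {}      # key -> (value, expires_at)
        self._tombstones = {}  # key -> tombstone expires_at

    def purge(self, key):
        """Handle a broadcast purge: drop the value and set a tombstone."""
        self._values.pop(key, None)
        self._tombstones[key] = self._clock() + TOMBSTONE_TTL

    def _tombstoned(self, key):
        expires_at = self._tombstones.get(key)
        return expires_at is not None and expires_at > self._clock()

    def get(self, key):
        """Return the cached value, or None on a miss or an expired entry."""
        entry = self._values.get(key)
        if entry is None or entry[1] <= self._clock():
            return None
        return entry[0]

    def set(self, key, value, ttl, replica_lag=0.0):
        """Store a value and return the TTL that was actually applied."""
        if self._tombstoned(key) or replica_lag > MAX_LAG:
            # The value may come from a lagged replica, so keep it briefly.
            ttl = min(ttl, LOW_TTL)
        self._values[key] = (value, self._clock() + ttl)
        return ttl
```

In this sketch, a value recomputed right after a purge lives for only `LOW_TTL` seconds, so a stale read from a lagging replica is replaced soon after. Once the tombstone has expired, normal time-to-live values apply again.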

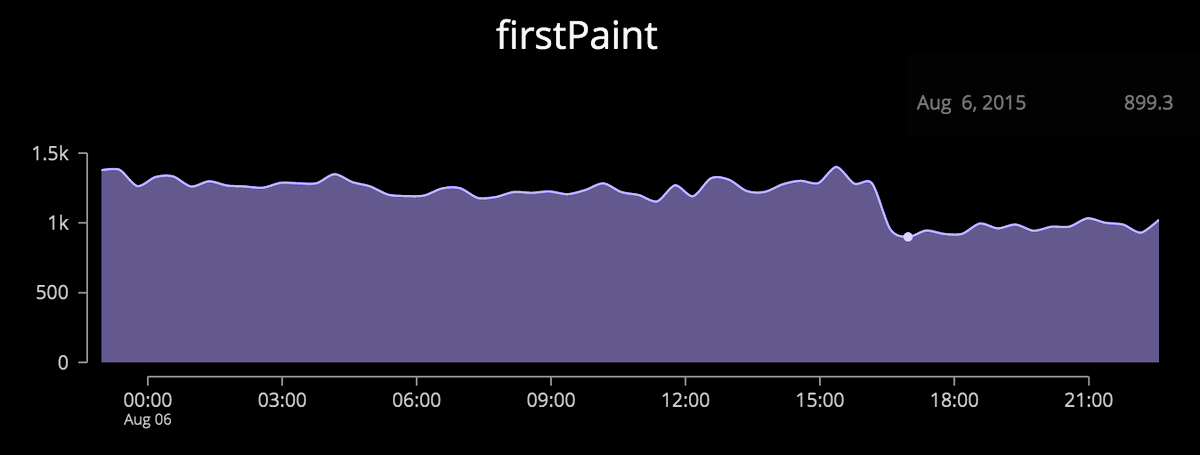

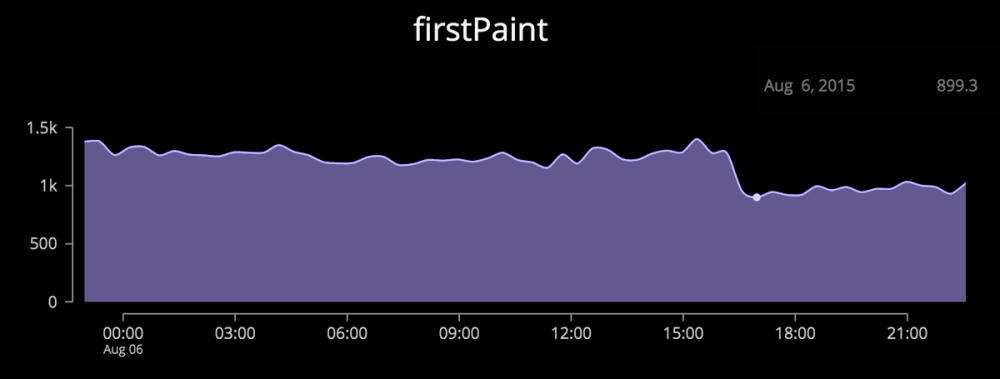

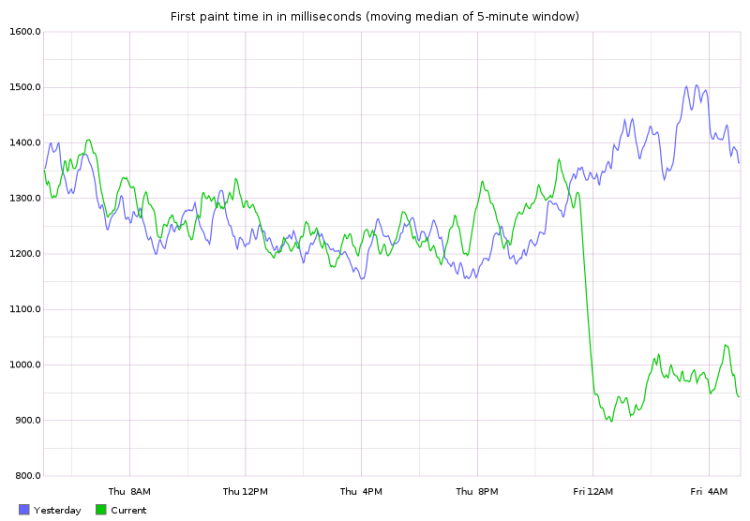

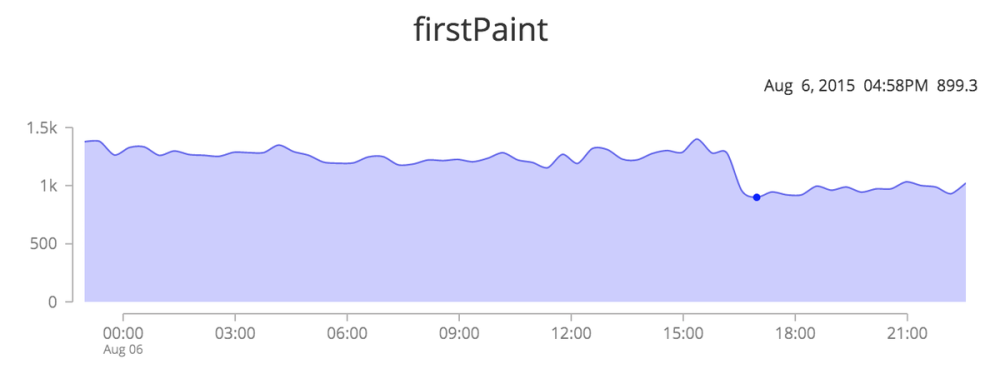

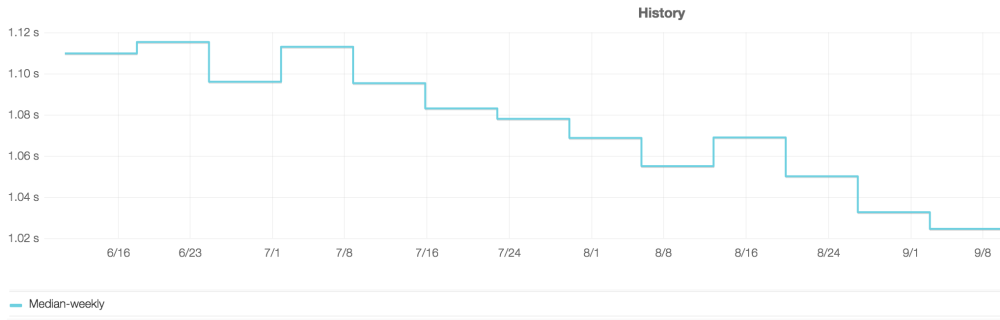

First paint time now under 900ms

In July we set out a goal: improve page load performance so our median first paint time would go down from approximately 1.5 seconds to under a second – and stay under it!

I identified synchronous scripts as the single biggest task blocking the browser between the start of a page navigation and the first visual change seen by Wikipedia readers. We had used async scripts before, but converting these last two scripts to be asynchronous was easier said than done.

There were several blockers to this change, including the use of embedded scripts by interactive features. These were partly migrated to CSS-only solutions. For the other features, we introduced the notion of “delayed inline scripts”. Embedded scripts now wrap their code in a closure and add it to an array. After the module loader arrives, we process the closures from the array and execute the code within.
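The queueing pattern behind delayed inline scripts can be sketched as follows. The real mechanism runs as JavaScript in the browser; this Python version only illustrates the control flow, and the `Page` class and its method names are hypothetical. Running a closure immediately when the loader is already present is an assumption of this sketch, not something stated above.

```python
class Page:
    """Toy model of a page whose module loader arrives asynchronously."""

    def __init__(self):
        self.pending = []   # closures queued by embedded scripts
        self.loader = None  # set once the module loader has arrived

    def run_when_ready(self, fn):
        """Called by an embedded script: queue fn, or run it if possible."""
        if self.loader is not None:
            fn(self.loader)  # assumption: late callers run straight away
        else:
            self.pending.append(fn)

    def loader_arrived(self, loader):
        """Called once by the loader: drain the queue in insertion order."""
        self.loader = loader
        queued, self.pending = self.pending, []
        for fn in queued:
            fn(loader)
```

Because the closures run in the order they were queued, embedded scripts keep their relative ordering even though none of them blocks rendering.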

Another major blocker was the subset of community-developed gadgets that didn’t yet use the module loader (introduced in 2011). These legacy scripts assumed a global scope for variables, and depended on browser behaviour specific to serially loaded, synchronous scripts. Between July 2015 and August 2015, I worked with the community to develop a migration guide. After a short deprecation period, the legacy loader was removed.

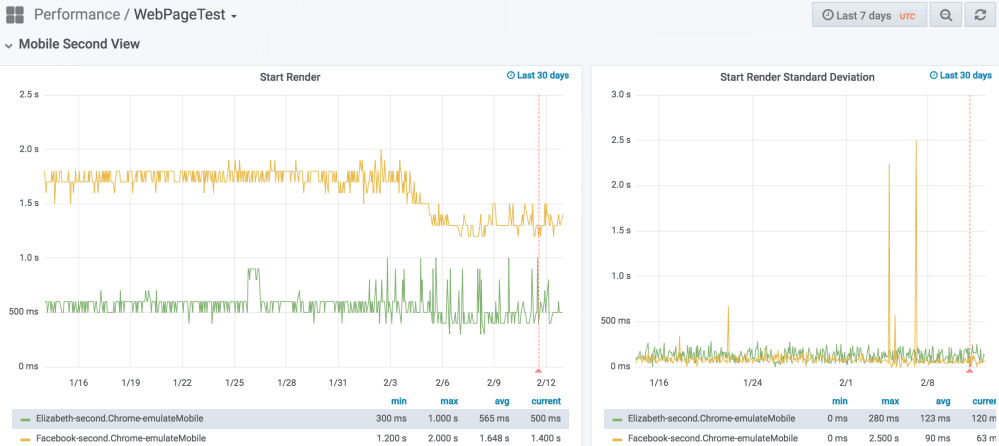

Hello, WebPageTest

Previously, we only collected performance metrics for Wikipedia from sampled real-user page loads. This is super and helps detect trends, regressions, and other changes at large. But to truly understand what makes a page load a certain way, we need synthetic testing as well.

Synthetic testing offers frame-by-frame video captures, waterfall graphs, performance timelines, and above-the-fold visual progression. We can run these automatically (e.g. every hour) for many URLs, on many different browsers and devices, and from different geographic locations. These tests allow us to understand and analyse performance. We can then compare runs over any period of time, and across different factors. It also gives us snapshots of how pages were built at a certain point in time.

The results are automatically recorded into a database every hour, and we use Grafana to visualise the data.

In 2015, Peter built out the synthetic testing infrastructure for Wikimedia from scratch. We use the open-source WebPageTest software. To read more about its operation, check Wikitech.

The journey to Thumbor begins

Gilles evaluated various thumbnailing services for MediaWiki. The open-source Thumbor software came out as the most promising candidate.

Gilles implemented support for Thumbor in the MediaWiki-Vagrant development environment.

For more about our journey to Thumbor, see The Journey to Thumbor (part 1).

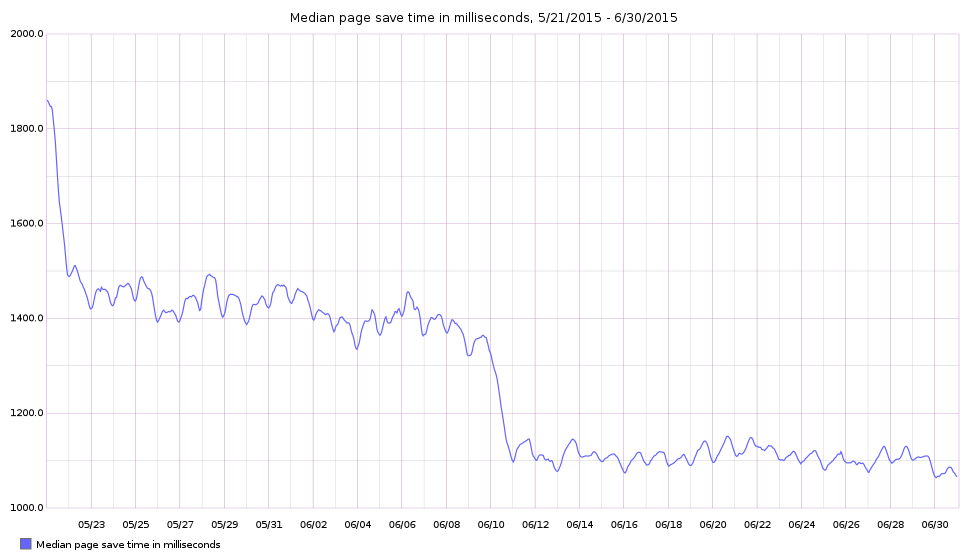

Save timing reduced by 50%

Save timing is one of the key performance metrics for Wikipedia. It measures the time from when a user presses “Publish changes” while editing until the user’s browser starts to receive a response. During this time, many things happen. MediaWiki parses the wiki-markup into HTML, which can involve page macros, sub-queries, templates, and other parser extensions. These inputs must be saved to a database. There may also be some cascading updates, such as the page’s membership in a category. And last but not least, there is the network latency between the user’s device and our data centres.

This year saw a 50% reduction in save timing. At the beginning of the year, median save timing was 2.0 seconds (quarterly report). By June, it was down to 1.6 seconds (report), and in September 2015, we reached 1.0 seconds! (report)

The effort to reduce save timing was led by Aaron Schulz. The impact that followed was the result of hundreds of changes to MediaWiki core and to extensions.

Deferring tasks to post-send

Many of these changes involved deferring work to happen post-send, that is, after the server sends the HTTP response to the user and closes the main database transaction. Examples of tasks that now happen post-send are: cascading updates, emitting “recent changes” objects to the database and to pub-sub feeds, and doing automatic user rights promotions for the editing user based on their current account age and total edit count.

Aaron also implemented the “async write” feature in the multi-backend object cache interface. MediaWiki uses this for storing the parser cache HTML in both Memcached (tier 1) and MySQL (tier 2). The second write now happens post-send.

By re-ordering these tasks to occur post-send, the server can send a response back to the user sooner.
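The re-ordering can be illustrated with a small sketch. This is not MediaWiki's deferred-update code: the `Request` class, its methods, and the task labels are hypothetical. It only shows the idea of queueing non-critical work during the request and running it after the response has gone out.

```python
from collections import deque


class Request:
    """Toy request handler that postpones non-critical work."""

    def __init__(self):
        self._deferred = deque()  # tasks to run after the response is sent
        self.events = []          # ordered log of what happened

    def defer(self, task):
        """Queue a callable to run post-send."""
        self._deferred.append(task)

    def handle_save(self):
        """Do the critical work, respond, then run the deferred tasks."""
        self.events.append("save revision")
        self.defer(lambda: self.events.append("cascading updates"))
        self.defer(lambda: self.events.append("recent changes"))
        self.events.append("send response")  # the user stops waiting here
        self._run_deferred()

    def _run_deferred(self):
        # Tasks may queue further tasks, so loop until the queue is empty.
        while self._deferred:
            self._deferred.popleft()()
```

Everything logged after "send response" no longer counts towards the time the user spends waiting, which is the part that save timing measures.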

Working with the database, instead of against it

A major category of changes was improvements to database queries. Examples include reducing lock contention in SQL, refactoring code in a way that reduces the amount of work done between two write queries in the same transaction, splitting large queries into smaller ones, and avoiding use of database master connections whenever possible.

These optimisations reduced the chances of queries being stalled, and allowed them to complete more quickly.

Avoid synchronous cache re-computations

The aforementioned work on WANObjectCache also helped a lot. Whenever we converted a feature to use this interface, we reduced the amount of blocking cache computation that happened mid-request. WANObjectCache also performs probabilistic preemptive refreshes of near-expiring values, which can prevent cache stampedes.
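As a rough illustration of probabilistic preemptive refresh, the sketch below lets the chance of an early refresh rise as a value nears expiry. The linear ramp, the `refresh_window` parameter, and both function names are assumptions made for this example. The formula that WANObjectCache actually uses is not described here and may well differ.

```python
import random


def refresh_chance(remaining_ttl, refresh_window):
    """Chance of refreshing early, given the seconds left before expiry.

    Zero outside the window, rising linearly to 1.0 at expiry.
    """
    if remaining_ttl <= 0:
        return 1.0
    if remaining_ttl >= refresh_window:
        return 0.0
    return 1.0 - remaining_ttl / refresh_window


def should_refresh(remaining_ttl, refresh_window, rng=random.random):
    """Decide, for one cache hit, whether to recompute the value now."""
    return rng() < refresh_chance(remaining_ttl, refresh_window)
```

With many requests hitting the same key, a few of them are likely to refresh the value shortly before it expires, so the remaining requests rarely all miss at the same moment.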

Profiling can be expensive

We disabled the performance profiler of the AbuseFilter extension in production. AbuseFilter allows privileged users to write rules that may prevent edits based on certain heuristics. Its profiler would record how long the rules took to inspect an edit, allowing users to optimise them. The way the profiler worked, though, added a significant slowdown to the editing process. Work began later in 2016 to create a new profiler, which has since been completed.

And more

There were lots of small things too, including fixing the User object cache, which existed but wasn’t working. We also stopped caching values in Memcached when computing them is faster than the Memcached latency required to fetch them!

We also improved latency of file operations by switching more LBYL-style coding patterns to EAFP-style code. Rather than checking whether a file exists, is readable, and then checking when it was last modified – do only the latter and handle any errors. This is both faster and more correct (due to LBYL race conditions).
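The difference between the two styles can be shown with a short Python sketch. MediaWiki itself is written in PHP, so this illustrates the pattern rather than the code that changed, and both function names are hypothetical.

```python
import os


def mtime_lbyl(path):
    """Look before you leap: several checks, each a separate system call."""
    if os.path.exists(path) and os.access(path, os.R_OK):
        # Race: the file can vanish between the checks and this call,
        # in which case getmtime() raises despite the checks above.
        return os.path.getmtime(path)
    return None


def mtime_eafp(path):
    """Easier to ask forgiveness: one call, with the error handled."""
    try:
        return os.stat(path).st_mtime
    except OSError:
        # Covers a missing file, a permission problem, and similar errors.
        return None
```

The EAFP version makes a single file system call on the common path and has no window between check and use in which the file can change.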

So long, Sajax!

The Sajax library had a utility for creating an XMLHttpRequest object in a cross-browser-compatible way. MediaWiki deprecated Sajax in favour of jQuery.ajax and the MediaWiki API. Yet, years later in 2015, this tiny part of Sajax remained popular in Wikimedia’s ecosystem of community-developed gadgets.

Sajax was a library for invoking a subroutine on the server, and receiving its return value as JSON from client-side JavaScript. In March 2006, it was adopted in MediaWiki to power the autocomplete feature of the search input field.

The legacy library was loaded by default on all Wikipedia page views for nearly a decade. During a performance inspection this year, Ori Livneh decided it was high time to finish this migration. Goodbye Sajax!

Further reading

This year also saw the switch to encrypt all Wikimedia traffic with TLS by default.