Measuring the performance of Wikipedia visitors’ devices

By Gilles Dubuc, Wikimedia Performance

Background

As a dedicated performance team, we’ve reported the long-term evolution of Wikipedia’s web performance for years. Most years we’ve managed to achieve very significant year-over-year improvements on key performance metrics. However, knowing that internet users constantly upgrade their devices, that browsers get performance improvements of their own, and that the speed of the internet itself keeps improving as a whole, we started to wonder how much of these yearly improvements were our own doing, and how much was due to the web’s environment getting better on its own.

To answer the question of how fast internet speeds are improving for our audience, we gather data exposed by the Network Information API, which provides information about the effective connection experienced recently by visitors.
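As a minimal sketch of what reading this data can look like, the snippet below takes a snapshot of the connection estimate. The `effectiveType`, `rtt` and `downlink` properties come from the Network Information API; the `ConnectionInfo` and `NavigatorLike` types and the `readConnection` helper are names made up for this illustration, not the code Wikipedia runs. The API is not available in every browser, so the sketch treats it as optional.

```typescript
// Minimal shape of the parts of the Network Information API used here.
// Every field is optional because browser support varies.
interface ConnectionInfo {
  effectiveType?: string; // "slow-2g", "2g", "3g" or "4g"
  rtt?: number;           // estimated round-trip time, in milliseconds
  downlink?: number;      // estimated bandwidth, in megabits per second
}

// Hypothetical stand-in for the browser's `navigator` object.
interface NavigatorLike {
  connection?: ConnectionInfo;
}

// Returns a plain snapshot of the connection estimate, or null when the
// browser does not expose the API.
function readConnection(nav: NavigatorLike): ConnectionInfo | null {
  const connection = nav.connection;
  if (!connection) {
    return null;
  }
  return {
    effectiveType: connection.effectiveType,
    rtt: connection.rtt,
    downlink: connection.downlink,
  };
}

// In a browser: readConnection(navigator as NavigatorLike)
```

Passing the navigator object in as a parameter keeps the helper easy to exercise outside a browser.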

To figure out if browser updates bring performance improvements or regressions to our visitors, we look at the User Agent string and compare the performance metrics of consecutive versions of a given browser as new ones are being rolled out.
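The comparison described above can be sketched as grouping a metric by browser major version and looking at the median of each group. This is only an illustration under simplifying assumptions: it handles Firefox alone (whose User Agent string contains `Firefox/<version>`), and the `Sample` type, the `loadTimeMs` field and the helper names are hypothetical.

```typescript
// Hypothetical record: one sampled pageview with its User Agent and a metric.
interface Sample {
  userAgent: string;
  loadTimeMs: number;
}

// Extracts Firefox's major version, or returns null for other browsers.
function firefoxMajorVersion(userAgent: string): number | null {
  const match = /Firefox\/(\d+)/.exec(userAgent);
  return match ? Number(match[1]) : null;
}

// Median of a non-empty list of numbers.
function median(values: number[]): number {
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Groups samples by Firefox major version and returns each group's median.
function medianByVersion(samples: Sample[]): Map<number, number> {
  const groups = new Map<number, number[]>();
  for (const sample of samples) {
    const version = firefoxMajorVersion(sample.userAgent);
    if (version === null) {
      continue; // not Firefox: ignored in this sketch
    }
    const group = groups.get(version) ?? [];
    group.push(sample.loadTimeMs);
    groups.set(version, group);
  }
  const medians = new Map<number, number>();
  groups.forEach((values, version) => medians.set(version, median(values)));
  return medians;
}
```

Comparing the medians of two consecutive versions while both are in use at the same time helps separate the effect of the browser update from other changes happening over the same period.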

We are also curious about how fast the device is. On Android, most devices expose a reasonably unique name in their User Agent string, which lets a website figure out the make and model of the device. This can be cross-referenced to a database of technical specifications, or even benchmark scores by phone reviewers, to estimate how powerful a device is.

However, this strategy only works for Android, as Apple doesn’t let the browser know which generation of iOS device it’s dealing with. This technique also falls short when it comes to variations in performance between devices of the same model. Different people use their devices differently, and some devices can be considerably slowed down by apps running in the background or when their free storage runs low. More importantly, most mobile devices have a CPU/GPU throttling mechanism that slows down the device on purpose when the battery is low. As a result, an average mobile device can easily start performing like a low-end one when it’s close to running out of battery. In that case, technical specifications and benchmark scores obtained under ideal battery conditions matter little in practice. There is currently no API telling the browser that the device is throttled by a battery-saving mode, which means that we can’t know that directly.

Microbenchmark

Lacking any existing ready-made API to gather information about how powerful a device is (and by that we really mean the combination of device, operating system and browser), we set out to design a novel technique to estimate this information.

As with any other performance measurement, we strive to have as little impact as possible on performance itself when we take measurements and collect data. Web performance monitoring can’t fully avoid the observer effect, but we can try to minimize it. As a matter of principle, we only measure the performance of a sample of visitors, typically 1 in every 1000 pageviews.
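A sampling decision of this kind can be sketched in a few lines. This is an assumed, simplified version rather than the actual sampling logic used on Wikipedia; the `isSampled` name and the injectable `random` parameter are made up for the example.

```typescript
// Decides whether the current pageview is part of the measured sample.
// `oneIn` is the "1 in N" denominator; `random` can be replaced in tests.
function isSampled(oneIn: number, random: () => number = Math.random): boolean {
  if (!(oneIn >= 1)) {
    return false; // invalid rate (including NaN): never sample
  }
  return random() < 1 / oneIn;
}

// Only sampled pageviews would go on to run any measurement code:
// if (isSampled(1000)) { /* collect metrics */ }
```

Making the decision once per pageview, before any measurement code runs, means unsampled visitors pay almost no cost.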

We decided to create a microbenchmark measuring the processing speed of the client at a given time, shortly after the page has loaded. In order to avoid interfering with the execution of the main thread on the current page, we used a Web Worker, which runs on a separate thread. We also manually tuned it to run in a short amount of time (less than 500ms for most clients), in order to minimize the amount of wasted processing.
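The general shape of such a microbenchmark can be sketched as follows. This is not the benchmark Wikipedia runs: the `workload` function, the iteration count and all helper names are assumptions made for illustration, and the worker is created from an inline `Blob` only to keep the sketch in a single file. Copying the function into the worker with `workload.toString()` is a shortcut that relies on the function being self-contained.

```typescript
// Hypothetical CPU-bound workload: a fixed amount of floating-point math.
// It must be self-contained, because its source text is copied into the worker.
function workload(iterations: number): number {
  let acc = 0;
  for (let i = 1; i <= iterations; i++) {
    acc = (acc + Math.sqrt(i) * Math.sin(i)) % 1000003;
  }
  return acc;
}

// Builds the script the worker runs: time one call to the workload and post
// the elapsed milliseconds (plus the result, so the loop is not dead code).
function buildWorkerSource(iterations: number): string {
  return [
    "const start = performance.now();",
    `const result = (${workload.toString()})(${iterations});`,
    "postMessage({ elapsedMs: performance.now() - start, result });",
  ].join("\n");
}

// Browser-only: runs the benchmark off the main thread and resolves with the
// elapsed time in milliseconds.
function runMicrobenchmark(iterations: number): Promise<number> {
  const blob = new Blob([buildWorkerSource(iterations)], { type: "text/javascript" });
  const url = URL.createObjectURL(blob);
  return new Promise<number>((resolve, reject) => {
    const worker = new Worker(url);
    const cleanUp = () => {
      worker.terminate();
      URL.revokeObjectURL(url);
    };
    worker.onmessage = (event) => {
      cleanUp();
      resolve(event.data.elapsedMs);
    };
    worker.onerror = (event) => {
      cleanUp();
      reject(new Error(event.message));
    };
  });
}
```

In a browser, `runMicrobenchmark(200000).then((ms) => { /* report ms */ })` would be called shortly after the page has loaded. The iteration count here is arbitrary and would need tuning against real devices to keep the run short.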

To verify that it worked, we looked at the scores on a variety of real-world devices. We were able to verify that scores stay within a small range on a given device over time, that the score worsens in low battery mode, and that differences between two devices of the same make and model can be significant.

Findings

We have been collecting these microbenchmark scores for over a year now, which lets us see the long-term evolution of our audience as a whole. This is the information we were looking for, to get an idea of how fast the device/operating system/browser environment improves on its own.

Between April 2019 and April 2020 the median processing speed of our visitors improved by 19% as a whole. That’s a huge difference! While device processing speed isn’t the only possible bottleneck for the effective web performance experienced by users, such a large change should influence global performance metrics. As a result, it’s fair to say that if your performance metrics stayed stable during that year, it’s likely that your performance actually regressed. Your website’s performance regression was compensated by the environmental improvement of internet users’ device/OS/browser stacks as a whole. Likewise, even if you saw some performance gains in your real user monitoring, they might not have been as large as they could have been.

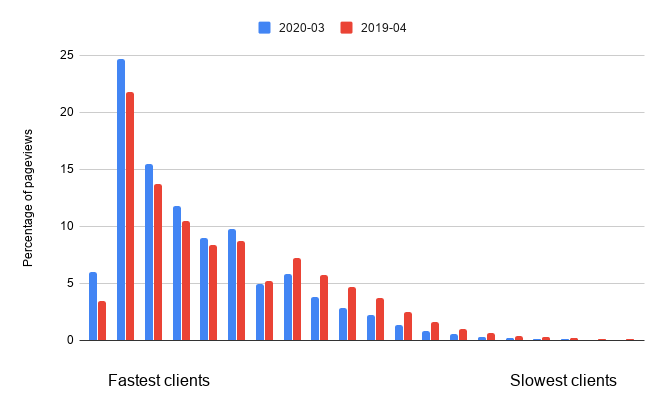

When I shared this preliminary result on Twitter, Alex Russell rightfully asked whether this was an evenly distributed improvement, or whether high-end device upgrades pulled the overall scores forward while the situation was slower-moving or stagnant for low-end devices. Looking at the evolution of the distribution of microbenchmark scores over time, it appears that it’s really the whole range of devices becoming faster.

We can clearly see the global shift towards faster clients, for slow and fast ones alike.
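One way to check for this kind of shift numerically is to compare several percentiles of the score distribution between two periods, not just the median. The sketch below assumes that scores are benchmark durations in milliseconds (lower is faster) and uses linear interpolation between ranks; the function names are hypothetical, and this is not necessarily how the analysis above was carried out.

```typescript
// Percentile (p between 0 and 100) using linear interpolation between ranks.
// Returns NaN for an empty list.
function percentile(values: number[], p: number): number {
  if (values.length === 0) {
    return NaN;
  }
  const sorted = [...values].sort((a, b) => a - b);
  const rank = (p / 100) * (sorted.length - 1);
  const lower = Math.floor(rank);
  const upper = Math.ceil(rank);
  return sorted[lower] + (sorted[upper] - sorted[lower]) * (rank - lower);
}

// Relative change of each requested percentile between two periods.
// Negative values mean the benchmark ran faster in the later period.
function percentileShift(
  before: number[],
  after: number[],
  percentiles: number[],
): Map<number, number> {
  const shifts = new Map<number, number>();
  for (const p of percentiles) {
    const oldValue = percentile(before, p);
    const newValue = percentile(after, p);
    shifts.set(p, (newValue - oldValue) / oldValue);
  }
  return shifts;
}
```

If the shift is similar at, say, the 10th, 50th and 90th percentiles, the improvement is spread across slow and fast clients alike. A shift concentrated in the fast percentiles would instead point to high-end upgrades driving the change.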

Conclusion

Our microbenchmark technique seems like the best thing we can do currently to measure the performance of the device/OS/browser stacks of our visitors. However, it is a bit naive: it only measures a short window of time that could be unusually busy or quiet, and it wastes some processing cycles and a little battery for sampled visitors. Ideally, we would want to replace this technique with a more passive collection method, similar to the Network Information API, where the browser would measure its own recent processing speed based on work it would do anyway. The big challenge in creating something like this is standardizing it, as different browsers have different execution models. If such work happened, it could yield interesting benefits for adaptive experiences, where a website could detect that the device is (possibly temporarily) underpowered and adapt the user experience accordingly. This is the kind of feature web developers have already built to adapt to network conditions.

We hope that this work will inspire others to study their own audience more, and not to take their long-term performance improvements for granted. There is a lot of work to be done to be able to fully remove the environmental factors from real user monitoring data and to understand the website’s share of responsibility in performance changes.

About this post

Featured image credit: Autobahn A5 mit Flughafen Frankfurt, Norbert Nagel, CC BY-SA 3.0