Ceph distributed VM storage coming to Cloud Services

By Andrew Bogott, Senior Site Reliability Engineer

When the Wikimedia cloud platform was first designed in 2011, it relied heavily on distributed storage. Home directories, project storage, and VM root drives were all located on a GlusterFS file system spread out over a cluster of servers. Gluster was new; we were early adopters, and our use case was outside the initial expectations of the Gluster designers. The result was a bit ugly. Every week or two the filesystem would get overwhelmed and fall into a ‘split-brain state’ where different hosts in the cluster had different ideas about what the latest version of a file looked like; then admins would have to shut everything down, go through the logs, and make unilateral choices about which file versions lived and which died. Often there was data corruption followed by the inevitable apologetic admin emails: “Sorry, your VM was corrupted and will have to be rebuilt. Remember: cattle, not pets!”

As is often the case with young projects, every fixed bug had a new bug hiding behind it. It eventually became clear that we weren’t going to be able to provide stability for our users with GlusterFS. Shared files (home directories, etc.) moved to NFS. VM storage was moved off of shared systems entirely.

That is essentially how things remain: each VM has a single, fixed-size drive that is stored on a single physical host (aka ‘hypervisor’). This approach has served us surprisingly well — RAID storage means that when individual drives fail, we can generally replace them before any data is lost, and we’ve never had a major loss of VM data. Despite this success, I’ve spent the last five years waiting for the other shoe to drop, because local VM storage has some serious disadvantages:

- If a hypervisor does suffer total failure (e.g. a misbehaving drive controller), all VMs hosted on that system could be corrupted or lost. Some of our hypervisors host as many as 80 VMs at once. As easy as it is to say ‘cattle, not pets,’ it’s clear that most volunteer-run projects don’t have the resources to maintain a fully-puppetized, easily recreated setup; such a failure would be extremely disruptive.

- Shutting down a hypervisor for maintenance means shutting down all the VMs hosted there. This is one of the main causes of those ‘Instance Downtime’ emails that we send out periodically.

- If we want to replace or re-image a hypervisor, we have to copy the complete contents of every VM off of the hypervisor first. This takes many hours, and results in yet more ‘Instance Downtime’ emails. When the VMs that need moving are part of a larger cluster, this involves all kinds of elaborate load-balancing and failover dances that require special knowledge of each service involved.

- File storage is strictly bound to individual VMs. If someone wants to rebuild a VM with a different operating system or just get a fresh start, they have to download all of their data first, or arrange for a complicated VM-to-VM copying process.

Nearly a decade has elapsed since our original experience with shared storage, and the bruises have mostly healed. In recent years, it’s been more and more obvious that no one building a new cloud today is doing things the way we are; for most public cloud providers, the amount of risk and downtime that comes along with local storage would be simply unacceptable.

Some of these other cloud providers are using a much-more-advanced-and-finally-ready-for-prime-time GlusterFS setup. Even more are using a different storage solution, Ceph. Based on conventional wisdom (and remaining trauma associated with Gluster), we’ve decided to adopt Ceph as our new storage system. Last year, Jason Hedden built a small proof-of-concept Ceph cluster and convinced us that it’s a viable option. A few minor WMCS projects have been running on Ceph for several months. Jason has since left to pursue other opportunities, but after many budget discussions and hardware orders, we are now ready to build out a full-sized cluster that will eventually support storage for the entire WMCS platform.

The first use of the new cluster will be to host the most cattle-like VMs in our platform: Toolforge Kubernetes worker nodes. As we iron out various issues and bugs, we’ll gradually move more and more VMs off of local storage.

Once everything is Ceph-hosted we’ll start to see the first big payoff: ‘live migration’ of VMs. With live migration, we can transfer a virtual server from one physical host to another with no interruption in service. That will finally allow us to do needed hypervisor maintenance without imposing downtime on our users. Ceph will also maintain multiple replicas of every block of VM data, which will cause us to lose a lot less sleep worrying about data loss from hypervisor failure.

Once we’ve secured better stability and uptime for VMs, we hope to also implement attachable block storage. That will allow users to attach additional storage to running virtual servers, and even detach that storage and reattach it to a different server.

We’re planning to be cautious in our progress to this new platform, so it may be several weeks or months before you start hearing maintenance & downtime announcements about the move. When you do, you can rejoice in knowing that they might be our last.

About this post

This is part 1 of a 2-part series of posts on Ceph and Wikimedia Cloud Services. Read Part 2.

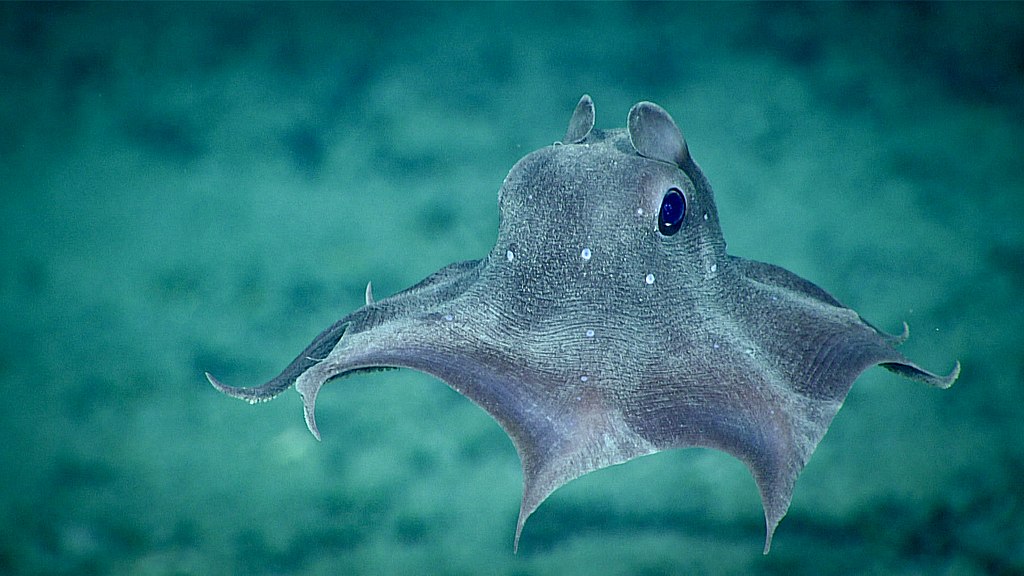

Featured image credit: Dumbo Octopus, NOAA, CC BY-SA 2.0